macOS Big Sur telling Apple what app you’ve opened isn’t a security or privacy issue

After a problem on Thursday, Apple’s app security measures have come under fire for reporting back what users are running on their Mac. But the privacy concerns about bad actors potentially monitoring app usage are not as big an issue as one researcher suggests.

On Thursday, macOS users reported issues trying to upgrade to macOS Big Sur, while others had trouble running applications even without upgrading. The problem was determined to be server-related, with an issue on Apple’s side preventing its certificate-checking function from working properly.

That same service has been picked up by security researcher Jeffrey Paul, founder of an application security and operational security consulting firm. In a lengthy piece written on Thursday, Paul attempted to raise awareness of a perceived privacy issue within macOS, namely that it seemingly reports back to Apple what apps are being opened up by a user.

According to Paul, the communications between a Mac and specific Apple servers can be combined with the IP address they originate from to build up a mass of metadata about a user’s actions. This would include where they are and when, as well as details of their computer and what software they’re running.

Collected over time, this data could supposedly form an archive that bad actors could easily mine, giving them considerable ability to perform surveillance on a mass scale, possibly at levels comparable to the infamous and now shut-down PRISM surveillance program.

The problem is, it’s nowhere even close to that dramatic, and nowhere near that bad. And, if they were so inclined, the ISPs have the ability to harvest way more data on users with just general Internet usage than Gatekeeper ever surrenders.

How Gatekeeper works

Apple includes various security features in its operating systems, and macOS is no exception. To guard against malware in apps, Apple requires developers to go through several processes before their apps will run on macOS.

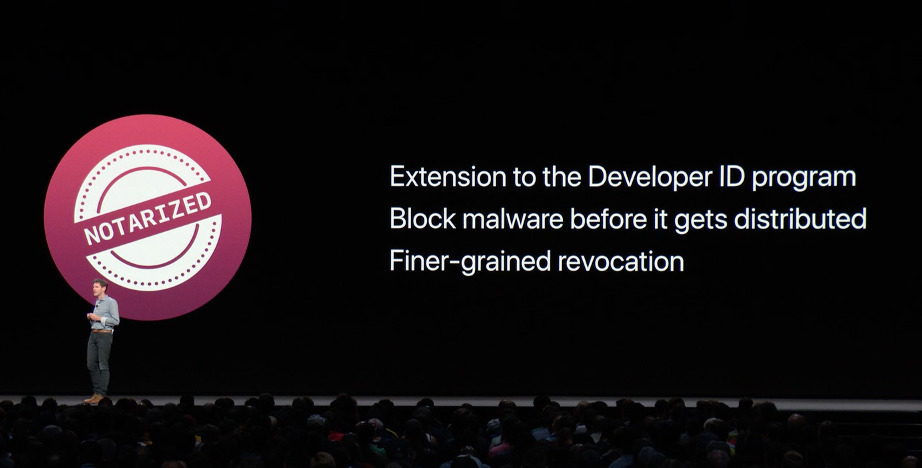

Along with creating security certificates, which help confirm that an app from a developer is authorized and genuine, Apple also mandates that apps undergo a notarization process. Registered developers submit apps to Apple, which scans them for security issues and malicious code before giving them the OK.

Apple introduced the notarization process to developers in 2018

This means apps are typically protected by being signed with a Developer ID that Apple knows about, as well as being checked by Apple itself, before they can run on macOS. Signed security certificates identify the app’s creator as authorized, while notarization minimizes the chance of an app executable being modified to carry malware.

Security certificates applying to an app or a developer can be revoked at any time, allowing for the quick deactivation of apps that are known to have malware or have gone rogue in some way. While this has led to issues in some cases, such as certificates expiring and causing apps to fail until developers renew them with a new version of the app, the system has largely been a success.

A communications breakdown

The problem area here is how Gatekeeper, the feature that manages these checks, actually performs the task. As part of the process, it communicates with Apple’s Online Certificate Status Protocol (OCSP) responder, which confirms certificates for Gatekeeper.

This communication involves macOS sending over a hash, a unique identifier of the program that needs to be checked.

A hash is a fixed-length string of characters produced by running an algorithm over a block of data, such as a document or an executable file. It is an effective way to confirm whether a file has been meddled with, since the hash generated from an altered file will almost certainly differ from the expected hash, indicating something is wrong.
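The tamper-detection property can be illustrated with Python’s standard `hashlib`. This is a minimal sketch that assumes SHA-256 purely for illustration; the article does not say which algorithm Gatekeeper uses, and the byte strings stand in for real executables.

```python
import hashlib

def file_digest(data: bytes) -> str:
    """Return the SHA-256 digest of a block of data as a hex string."""
    return hashlib.sha256(data).hexdigest()

original = b"pretend this is an app executable"
tampered = b"pretend this is an app executab1e"  # a single character changed

expected = file_digest(original)

# Re-hashing unchanged data always reproduces the same digest...
print(file_digest(original) == expected)  # True
# ...while even a one-character edit produces a different digest.
print(file_digest(tampered) == expected)  # False
# The digest length is fixed no matter how large the input is.
print(len(expected))  # 64
```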

Hashes are created from the application file in macOS and sent to the OCSP responder, which checks them against the hash it knows for that application. The OCSP then sends a response back, typically stating whether the file is genuine or has been tampered with, based solely on this hash value.
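The query-and-answer exchange can be modeled, very loosely, as a lookup table on the responder’s side. This is a simplified sketch: the identifiers, the `RESPONDER_DB` table, and the `ocsp_status` and `allow_launch` functions are hypothetical. In the OCSP standard itself (RFC 6960), a request identifies a certificate (by hashes of the issuer’s name and key plus the certificate’s serial number) rather than a file, and the responder answers “good”, “revoked”, or “unknown”.

```python
from enum import Enum

class CertStatus(Enum):
    # The three certificate statuses an OCSP response can carry (RFC 6960).
    GOOD = "good"
    REVOKED = "revoked"
    UNKNOWN = "unknown"

# Hypothetical responder database mapping an identifier to its current status.
RESPONDER_DB = {
    "id-trusted-app": CertStatus.GOOD,
    "id-malware": CertStatus.REVOKED,
}

def ocsp_status(identifier: str) -> CertStatus:
    """Answer a status query; identifiers the responder has never seen are UNKNOWN."""
    return RESPONDER_DB.get(identifier, CertStatus.UNKNOWN)

def allow_launch(status: CertStatus) -> bool:
    # Simplified client policy (an assumption): refuse only an explicit revocation.
    return status is not CertStatus.REVOKED

for query in ("id-trusted-app", "id-malware", "id-never-seen"):
    status = ocsp_status(query)
    print(query, status.value, "launch" if allow_launch(status) else "blocked")
```

Treating “unknown” as launchable is a design choice made here for simplicity, not a statement about how Gatekeeper handles that case.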

The failures to launch software and to perform the upgrade were caused by the OCSP being overwhelmed by requests, which made it run extremely slowly and fail to provide adequate responses.

Making a hash of things

Paul reasons that these known hashes effectively report back to Apple what you are running and when. Furthermore, when mapped to an IP address for basic geolocation and linked in some way to a user ID, such as an Apple ID, this data could let Apple “know when you open Premiere over at a friend’s house on their Wi-Fi, and they know when you open Tor Browser in a hotel on a trip to another city.”
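Paul’s scenario is essentially a data join. The minimal sketch below uses entirely hypothetical records, IP addresses from documentation-reserved ranges, and lookup tables to show how a log of (time, IP address, hash) entries could become a readable timeline, but only if the observer separately holds both an IP-to-location table and a hash-to-app table.

```python
from dataclasses import dataclass

@dataclass
class Request:
    time: str       # when the check was made
    source_ip: str  # the network address it came from
    app_hash: str   # the hash that was sent

# Hypothetical lookup tables that an observer would have to build separately.
IP_LOCATIONS = {"203.0.113.7": "friend's house", "198.51.100.20": "hotel"}
HASH_TO_APP = {"h-premiere": "Premiere", "h-tor": "Tor Browser"}

def timeline(requests):
    """Join raw requests with the lookup tables into human-readable events."""
    return [
        f"{r.time}: {HASH_TO_APP.get(r.app_hash, 'unknown app')} "
        f"at {IP_LOCATIONS.get(r.source_ip, 'unknown location')}"
        for r in requests
    ]

log = [
    Request("2020-11-12 18:22", "203.0.113.7", "h-premiere"),
    Request("2020-11-13 09:05", "198.51.100.20", "h-tor"),
]
for event in timeline(log):
    print(event)
```

Without either lookup table, the same log reduces to opaque strings and addresses.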

Apple’s theoretical knowledge is one thing, but Paul points out that these OCSP request hashes are transmitted openly and without encryption. Readable by anyone analyzing data packets, this information could be used in the same way by an ISP, by “anyone who has tapped their cables,” or by anyone with access to a third-party content delivery network used by Apple, to perform PRISM-style monitoring of users.

“This data amounts to a tremendous trove of data about your life and habits, and allows someone possessing all of it to identify your movement and activity patterns,” writes Paul. “For some people, this can even pose a physical danger to them.”

It is plausible that someone could determine which application you ran at a specific time by analyzing the hash, provided they had collected enough reference hashes to work out which app each one corresponds to. Security experts have many tools for analyzing hashes, so it wouldn’t be unreasonable for someone with sufficient resources, data storage, and computing power to do the same.
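The “enough hashes” requirement can be sketched as a reverse index: an observer hashes copies of apps they already have, then looks up each observed hash. The app names, stand-in byte strings, and use of SHA-256 are assumptions for illustration; anything outside the observer’s collection stays unidentified.

```python
import hashlib

def digest(data: bytes) -> str:
    """Hash a block of data (SHA-256 assumed for illustration)."""
    return hashlib.sha256(data).hexdigest()

# Hypothetical reference collection: stand-in bytes for apps the observer owns.
known_apps = {
    "Safari": b"stand-in bytes for Safari",
    "Preview": b"stand-in bytes for Preview",
    "Tor Browser": b"stand-in bytes for Tor Browser",
}
# Precompute digest -> app name so each lookup is a single dictionary access.
reverse_index = {digest(blob): name for name, blob in known_apps.items()}

def identify(observed_hash: str) -> str:
    """Map an observed hash back to an app name, if it is in the index."""
    return reverse_index.get(observed_hash, "unidentified")

observed = [digest(b"stand-in bytes for Preview"), digest(b"some in-house tool")]
print([identify(h) for h in observed])  # ['Preview', 'unidentified']
```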

However, realistically speaking, there’s not much utility in knowing only which app is being launched. And ISPs could obtain that data if they wanted to, without relying on the limited information that Apple’s Gatekeeper may provide.

Most of these hashes would amount to largely unusable data, even if identifiable, because many apps are generic or very widely used. There’s not much you could learn about a user from knowing they launched Safari or Chrome, as the hash identifies the app but not what they are looking at.

It’s doubtful that any nation state would care if someone opened macOS’ Preview app 15 times in a row. There are certainly edge cases, such as applications with highly specific uses that may interest third parties, but they are few and far between, and it would probably be easier to gather data through other means than by noting that an app has opened.

You don’t even have to look at the hashes to work out what a target user is running. Since many applications communicate over specific ports or port ranges, anyone in the same position to monitor data packets can similarly determine which application has just been run by checking which ports the traffic uses.

For example, port 80 is famously used for HTTP, or standard web traffic, while port 1119 can be used by Blizzard’s Battle.net for gaming. Arguably, you could change the port an application communicates through, but the operators of a mass surveillance system are going to be looking out for port 23399 as a sign of Skype calls, or 8337 for VMware.
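Port-based guessing of this kind amounts to a simple lookup. The sketch below uses only the port numbers named in this article, not an authoritative registry; the flow records and the `guess_application` function are hypothetical, and real traffic classification is considerably messier.

```python
# Port-to-application hints taken from the examples in this article.
PORT_HINTS = {
    80: "HTTP (web traffic)",
    1119: "Blizzard Battle.net",
    23399: "Skype",
    8337: "VMware",
}

def guess_application(dst_port: int) -> str:
    """Guess the application behind a flow from its destination port alone."""
    return PORT_HINTS.get(dst_port, "unknown")

# Hypothetical observed flows: (client address, destination port).
flows = [("10.0.0.5", 80), ("10.0.0.5", 1119), ("10.0.0.5", 51234)]
for host, port in flows:
    print(host, port, guess_application(port))
```

Note that no hash is involved: the port number alone is the signal.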

When traffic to and from port 1119 stops, for instance, the ISP could figure out that you’re done playing Warcraft. Gatekeeper doesn’t reveal anything like this.
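Inferring when a session starts and stops only needs packet timestamps. In this minimal sketch, the five-minute idle threshold and the sample timestamps are assumptions; a new session begins whenever traffic on the port has been silent for longer than the threshold.

```python
from datetime import datetime, timedelta

def sessions(timestamps, idle_gap=timedelta(minutes=5)):
    """Group packet timestamps into (start, end) sessions.

    A new session starts whenever traffic has been silent for longer than idle_gap.
    """
    result = []
    for ts in sorted(timestamps):
        if result and ts - result[-1][1] <= idle_gap:
            result[-1] = (result[-1][0], ts)  # extend the current session
        else:
            result.append((ts, ts))  # start a new session
    return result

# Hypothetical packets seen on port 1119, in minutes after 18:00.
base = datetime(2020, 11, 12, 18, 0)
port_1119_packets = [base + timedelta(minutes=m) for m in (0, 1, 2, 3, 45, 46)]
for start, end in sessions(port_1119_packets):
    print(f"Battle.net session {start:%H:%M}-{end:%H:%M}")
```

With these inputs, the sketch reports two sessions, 18:00-18:03 and 18:45-18:46.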

Sure, there’s theoretical potential for a PRISM-style spying program here, using the entirety of ISP data plus port monitoring. But it’s of extremely low utility to those who would want to set up such a thing.

“User 384K66478 has opened Runescape at 18:22,” the absolute most that Gatekeeper could expose, is of no help to anybody.

It’s not entirely new, nor is it secret

It is worth pointing out that this potential use of data isn’t a new issue for Apple users. Apple has used Gatekeeper to check certificates with server-based confirmation since the feature was first implemented in 2012, so it has been active for quite some time already.

If it were a privacy problem as framed by Paul — and it isn’t — it would have been one for quite a few years.

The practice of using online servers to confirm an app’s validity isn’t even limited to macOS, as Apple uses a similar validation process for the iOS ecosystem. There are even enterprise security certificates that let apps bypass Apple’s App Store rules in small quantities, but even these are revocable in a similar fashion, as Facebook found out in early 2019.

Microsoft has its own equivalent in Device Guard, a set of Windows 10 security features that fight malware using code signing and by sending hashes back to Microsoft to allow or block apps from running. Part of this entails communicating with servers to confirm whether apps are signed correctly.

Paul also frames the feature as largely secretive, something users aren’t aware of that could surreptitiously be used to monitor usage habits. However, with so many companies collecting data on users, such as online advertising firms and social networks, it would probably be unsurprising to most users that their Macs regularly contact Apple, especially for security reasons.

Ungraceful failure and “unblockable” messaging

One element that Paul latches onto is a change Apple is introducing in macOS Big Sur that alters how the system behaves. In previous versions of macOS, it was possible to block the OCSP requests made by the trustd daemon using a firewall or a VPN, causing the system to “fail quiet.”

The hash-checking system normally sends the hash to OCSP and expects two responses: an acknowledgment of receipt of the hash followed by a second that either approves or denies the hash as genuine. If the first acknowledgment is received, trustd will sit and wait for the second response to come through.

The issue that played out on Thursday was this precise scenario: the acknowledgments were sent, but the second response was not. Applications therefore failed to launch, waiting on an approval that was supposedly on the way but never arrived.

Hey Apple users:

If you’re now experiencing hangs launching apps on the Mac, I figured out the problem using Little Snitch.

It’s trustd connecting to https://t.co/FzIGwbGRan

Denying that connection fixes it, because OCSP is a soft failure.

(Disconnect internet also fixes.) pic.twitter.com/w9YciFltrb

— Jeff Johnson (@lapcatsoftware) November 12, 2020

This ties into Paul’s point: blocking access to the OCSP means the initial request never reaches the server, so there is neither an acknowledgment nor an approval. Because the hang depends on an acknowledgment arriving first, blocking access prevents the server from ever sending one, sidestepping the problem.

The “fail quiet” behavior benefits the user: because the seemingly offline OCSP has not responded, the system allows the app to run anyway and continues as normal.
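The three outcomes described above (fail quiet, hang, and a normal verdict) can be captured in a small decision function. This is a simplified model of the behavior as this article describes it, not trustd’s actual logic; the `gatekeeper_check` function is hypothetical, and treating an unacknowledged request like an unreachable one is an assumption.

```python
from enum import Enum, auto
from typing import Optional

class Outcome(Enum):
    LAUNCH = auto()  # the app is allowed to run
    BLOCK = auto()   # the app is refused
    HANG = auto()    # the launch stalls, waiting for a verdict

def gatekeeper_check(reachable: bool, acknowledged: bool,
                     verdict: Optional[bool]) -> Outcome:
    """Simplified model of the two-response exchange.

    reachable:    False if the request is firewalled, blocked by a VPN, or offline
    acknowledged: whether the server sends the first acknowledgment
    verdict:      True (approve), False (deny), or None if it never arrives
    """
    if not reachable or not acknowledged:
        # "Fail quiet": nothing was acknowledged, so nothing is waited on.
        # Assumption: an unacknowledged request is treated like an unreachable one.
        return Outcome.LAUNCH
    if verdict is None:
        # Acknowledged but never answered: the failure mode seen on Thursday.
        return Outcome.HANG
    return Outcome.LAUNCH if verdict else Outcome.BLOCK

scenarios = {
    "blocked by firewall": (False, False, None),
    "Thursday's outage": (True, True, None),
    "normal approval": (True, True, True),
    "revoked app": (True, True, False),
}
for name, args in scenarios.items():
    print(name, "->", gatekeeper_check(*args).name)
```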

The piece also cites Jamf principal security researcher Patrick Wardle, who found that Apple had added trustd to the “ContentFilterExclusionList,” a list of services and other elements that cannot be blocked by on-system firewalls or VPNs. Since trustd is unblockable, an attempt to contact the OCSP will always be made, which means the Mac will always phone home.
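Why an exclusion list defeats a local firewall can be shown with a toy filter that consults the list before applying any user rules. This is an illustrative sketch only: `EXCLUDED_PROCESSES` and `filter_decision` are hypothetical, and the structure is not Apple’s implementation of the “ContentFilterExclusionList.”

```python
# Hypothetical exclusion list: processes the filter never applies rules to.
EXCLUDED_PROCESSES = {"trustd"}

def filter_decision(process: str, user_blocked: set) -> str:
    """Return 'allow' or 'block' for an outgoing connection from `process`."""
    if process in EXCLUDED_PROCESSES:
        return "allow"  # excluded traffic bypasses the user's rules entirely
    return "block" if process in user_blocked else "allow"

# The user tries to block both trustd and an ordinary app.
user_rules = {"trustd", "SomeApp"}
print(filter_decision("trustd", user_rules))   # allow
print(filter_decision("SomeApp", user_rules))  # block
```

The user’s rule for trustd exists but is never consulted, which is why blocking has to happen off the Mac, as described next.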

Of course, this isn’t entirely unblockable. Offline Macs cannot use the security facility, and for those that are online, filtering rules on a home router or a corporate network can block that specific traffic. It is also feasible to do similar blocking on the move using a travel router.

Hashing it out

If this had surfaced while PRISM was still a live concern, it would have been worth caring more about: more metadata for the surveillance machine to ponder over, and more information for governments to use about their citizens.

But, obviously, it’s not. Time has passed, PRISM has been gone for over a year, and the general public is well aware that data is being generated every day from people’s activities and actions. Users have lost their innocence and are no longer ignorant of the situation they find themselves in.

Dressing this up as a potential leak of personal data may have made sense a few years ago, but not now.

At most, the information amounts to the small possibility that someone, through considerable work, determines that a user has opened Safari for the 47th time in a day. That seems like small potatoes. Add in that ISPs can acquire far more data with less effort by monitoring ports, and those potatoes get even tinier.

That you can acquire far better, more actionable data through other methods makes this pretty mundane in the grand scheme of things. There’s not even the prospect of Apple pulling a Google and using this data, as Apple has been a staunch defender of user privacy for many years and is unlikely to make such a move.

There’s no privacy battle to be fought, started, or escalated here.

Transparency is better

During those two hours on Thursday when Gatekeeper was preventing some users from opening some apps, Apple was silent. It’s still silent about the cause, what happened, and why.

Gatekeeper “calling home” is discussed indirectly in Apple’s terms of service, but, as with most of its high-visibility failures, Apple could be more transparent about it. It could tell users what it does with the Gatekeeper hashes, instead of leaving us to guess whether it retains them or uses and then discards them.

This is something Apple can easily do, given how open it is about other security features in its products. It could handle this in a similarly transparent public manner, as it did with the introduction of anonymous data sharing in its COVID-19 screening app.

It may be difficult to do so given the vocal opinions insinuating this could be part of a PRISM-like system, but it would be possible. Apple just has to lay it out to the public and offer assurances that there isn’t anything untoward taking place.

Apple just has to be a little clearer and louder.


You miss the reason: Apple can not only see what you’re using (which is none of its business and also potentially competitive knowledge), but can also decide which apps are allowed to run, that is, it can instantly block an app with or without good reason. PCs were an open platform. The slippery slope leads toward a controlled, closed environment, which is not what most professionals want. The question is whether there are still ways around it in macOS 11, since that OS even circumvents local firewall apps for OS functionality, which is very questionable.