Last Week on My Mac: Are your apps built to get the best from Apple silicon?

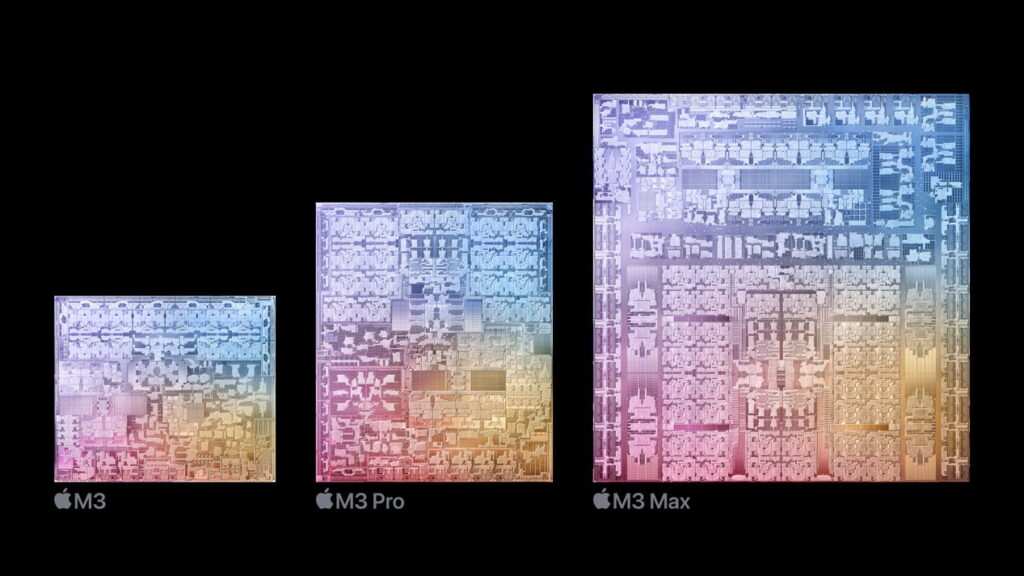

Looking at Apple’s images of the dies in its M3 chips, it’s amazing how little we know about them. What we do know is that there’s much more to them than meets the eye, or than Apple cares to mention. We’ve been told about their CPU and GPU cores, their types and numbers, about neural engines, and that’s about it. Painstaking work by independent researchers has identified a few of the specialist processing units seen in these images, but much of the structure of Apple’s M-series chips remains speculation.

Among those great unknowns in Apple silicon is a complete co-processor known as the AMX, apparently specialising in vector and matrix maths. If that seems even more esoteric than a neural engine, bear in mind that it’s designed to accelerate processing by performing multiple operations simultaneously. Multiple CPU cores enable us to run multiple apps at the same time, and for those apps to run multiple threads at once. While those allow our apps to run faster, for real performance improvements they also need to process smaller tasks in parallel. Instead of iterating through arrays of numbers one at a time, specialist processing units perform operations on arrays, as vectors and matrices.

Those functions are now commonplace, whether in images, video, audio, or 3D data. Matrix multiplication is one of the more straightforward of them to compare: it can be implemented in classical code and run on the Performance (P) cores, or the code can call one of the functions in Apple’s Accelerate library to do the job as efficiently as Apple’s engineers can provide, in this case most probably using the AMX co-processor.

When using 16 x 16 matrices containing 32-bit floating-point numbers, a single P core in an M3 Pro chip takes around 0.08 seconds to perform 10,000 matrix multiplications. That’s already a huge improvement on a P core in an M1 Max, which takes 0.17 seconds or so, over twice the time. Convert that code so that it calls an Accelerate function, vDSP_mmul, and increase the number of loops to take account of the change in speed. Using Accelerate, the M1 Max is over 80 times faster than using the P core alone, and the M3 Pro is more than 40 times faster.

Express those in terms of floating-point operations per second, and the M3 Pro Accelerates to 50 GFLOP/s from the P core value of 1.1 GFLOP/s. Those are huge differences in terms of how fast code runs, and in many cases will transform a feature that couldn’t be performed in real time into something that appears instant to the user.
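As a rough check on those figures, this Swift sketch converts a timing into GFLOP/s. It assumes that an n x n matrix multiplication costs 2n³ floating-point operations, one multiply and one add per inner-loop step. That's a common convention rather than anything stated in the measurements above, and the function name gflops is purely illustrative.

```swift
// Convert a measured run into GFLOP/s.
// Assumption: an n x n matrix multiplication costs 2 * n^3 floating-point
// operations (one multiply and one add per inner-loop step).
func gflops(matrixSize n: Int, multiplications: Int, seconds: Double) -> Double {
    let flopsPerMultiplication = 2.0 * Double(n * n * n)
    return flopsPerMultiplication * Double(multiplications) / seconds / 1e9
}

// M3 Pro P core: 10,000 multiplications of 16 x 16 matrices in around 0.08 s.
let pCoreRate = gflops(matrixSize: 16, multiplications: 10_000, seconds: 0.08)
print(pCoreRate)  // roughly 1 GFLOP/s
```

With the rounded time of 0.08 seconds this comes out slightly below the 1.1 GFLOP/s quoted, a gap that is well within the rounding of ‘around 0.08 seconds’.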

The impact goes beyond performance, and extends to energy efficiency. When run on an M3 P core using classical code, 200,000 loops require just under 10 J, whereas ten million loops of the Accelerate version require less than 9 J. Expressed in comparable terms, the P core version of matrix multiplication takes 49 J per million matrix multiplications, while the Accelerate version requires just 0.9 J/million, less than one-fiftieth of the energy. Converting code from classical form to get best use from an M-series chip thus not only makes it run much faster, but also greatly reduces its energy requirement, so extending the time between recharging the battery in a notebook.
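The normalisation behind those per-million figures is simple enough to sketch in Swift. The inputs here are the rounded upper bounds from the text (10 J and 9 J) rather than the measured values, so the results are bounds too, and joulesPerMillion is a name chosen just for this illustration.

```swift
// Energy per million matrix multiplications, from total energy and loop count.
func joulesPerMillion(totalJoules: Double, loops: Double) -> Double {
    return totalJoules / (loops / 1_000_000)
}

// Rounded upper bounds from the text, not the measured values themselves.
let classical = joulesPerMillion(totalJoules: 10, loops: 200_000)      // P core
let accelerated = joulesPerMillion(totalJoules: 9, loops: 10_000_000)  // Accelerate
print(classical, accelerated, classical / accelerated)
```

Those bounds give about 50 J/million and 0.9 J/million, a ratio of more than fifty to one.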

These figures have other implications too. Trying to compare performance and energy efficiency of Macs and PCs isn’t easy. If the code being used doesn’t take full advantage of these features in M-series chips, while it might seem more ‘fair’, it’s also unrepresentative of real-world performance, where you’d expect those developing for macOS to take advantage of them. One of the first questions we should be asking of any benchmark is thus whether it takes full advantage of Accelerate and other Apple-specific features.

More important questions apply to cross-platform and legacy code in the apps we use. Although the Accelerate library has been available for 20 years, since Mac OS X 10.3, and there’s no reason for old code not to have used it, in the past its benefits may not have been as clear, and to some developers it might have been seen as a bit too specialist. I suspect there’s a lot of code in the commercial apps we use that could be improved greatly, both in terms of performance and energy efficiency, by their making calls to library functions rather than adopting a more classical approach.

For legacy code to take full advantage of Accelerate, its developers have to identify the sections of code that need to be improved, recast them in terms of vector and matrix variables, then rewrite the code using structures for the appropriate library function call. Although it’s tempting to suggest that modern AI methods could do that, they depend on words and example code, both of which appear to be in short supply for Accelerate.
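As one illustration of the recasting step, classical code often stores a matrix as nested arrays, whereas vDSP functions such as vDSP_mmul work on contiguous buffers in row-major order. The sketch below shows that change of representation in plain Swift, without calling Accelerate. The helper flattenRowMajor is hypothetical, and real legacy code is likely to need rather more than this.

```swift
// Hypothetical helper: turn a nested-array matrix into one contiguous,
// row-major buffer, so element (i, j) lives at index i * columns + j.
func flattenRowMajor(_ matrix: [[Float]]) -> [Float] {
    guard let columns = matrix.first?.count else { return [] }
    precondition(matrix.allSatisfy { $0.count == columns },
                 "all rows must have the same length")
    return matrix.flatMap { $0 }
}

let nested: [[Float]] = [[1, 2, 3],
                         [4, 5, 6]]
let flat = flattenRowMajor(nested)
// Element (1, 2) of the nested form sits at index 1 * 3 + 2 in the flat form.
print(flat[1 * 3 + 2] == nested[1][2])
```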

Much thus depends on how much of your costly subscription is being invested in engineering effort, and how prepared vendors are to spend on improving macOS versions of their products. For the user, the only reliable test is to check the app out on demanding tasks. If it fails to show substantial improvement in performance when running on Apple silicon, and drains the battery, you’ll know it’s a lemon, and has just been hastily rebuilt to run native on Apple silicon without getting the best from the chips.

As Apple enters the third cycle of its M-series chips, we’re now entering the time in which some apps will win because of the effort invested in getting the best from this new architecture, and others will fall by the wayside as they’re outmoded and outclassed. Faites vos jeux! (Place your bets.)

Appendix: Source code

In the ‘classical’ CPU implementation, matrices A, B and C are each 16 x 16 Floats for simplicity, and the following is the loop that is repeated theMReps times for the test. Because of the slow performance of this code, theMReps scales down the number of repeats from millions to thousands.
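The loop itself isn’t reproduced here, so what follows is a minimal sketch of what such a classical implementation might look like, not the code used in the tests. The matrix contents and the value of theMReps are placeholders chosen so the result is easy to check.

```swift
// A sketch of a classical loop: C = A x B for 16 x 16 matrices of Floats.
let n = 16
// Illustrative contents only: A holds arbitrary values, B is the identity.
let A: [[Float]] = (0..<n).map { i in (0..<n).map { j in Float(i + j) } }
let B: [[Float]] = (0..<n).map { i in (0..<n).map { j in Float(i == j ? 1 : 0) } }
var C = [[Float]](repeating: [Float](repeating: 0, count: n), count: n)

let theMReps = 1_000  // placeholder repeat count, already scaled to thousands

for _ in 0..<theMReps {
    for i in 0..<n {
        for j in 0..<n {
            var sum: Float = 0
            for k in 0..<n {
                sum += A[i][k] * B[k][j]  // one multiply and one add per step
            }
            C[i][j] = sum
        }
    }
}
print(C == A)  // multiplying by the identity should leave A unchanged
```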

In the Accelerate implementation, the vDSP_mmul() function is called on the three matrices A, B and C, each 16 x 16 Floats. A and B are immutable inputs on the right-hand side of the equation C = A x B, and C is mutable to contain the results. The number of repeats theReps is here unscaled, and set in millions. Note that the only iteration is running the set number of repeats, as Accelerate consumes the matrices whole.
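Again as a sketch rather than the tested code, a call to vDSP_mmul() on row-major arrays of Floats could look like this. The matrix contents and the value of theReps are placeholders, and the plain-loop fallback is my own addition so that the example still runs where Accelerate can’t be imported.

```swift
#if canImport(Accelerate)
import Accelerate
#endif

let n = 16
// Row-major, contiguous storage: element (i, j) is at index i * n + j.
let A: [Float] = (0..<n * n).map { Float($0) }                // illustrative values
let B: [Float] = (0..<n * n).map { $0 / n == $0 % n ? 1 : 0 } // identity matrix
var C = [Float](repeating: 0, count: n * n)

let theReps = 1_000  // placeholder; the tests described here use millions

for _ in 0..<theReps {
    #if canImport(Accelerate)
    // C = A x B, all strides 1; then rows of A, columns of B, columns of A.
    vDSP_mmul(A, 1, B, 1, &C, 1, vDSP_Length(n), vDSP_Length(n), vDSP_Length(n))
    #else
    // Fallback (not part of the Accelerate version) for other platforms.
    for i in 0..<n {
        for j in 0..<n {
            var sum: Float = 0
            for k in 0..<n { sum += A[i * n + k] * B[k * n + j] }
            C[i * n + j] = sum
        }
    }
    #endif
}
print(C == A)  // multiplying by the identity should leave A unchanged
```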

Of course in reality each iteration would require setting up matrices A, B and C, but for these purposes that overhead isn’t included in the test.