iCloud Drive in Sonoma: Mechanisms, throttling and system limits

Last week I looked at how macOS Sonoma handles copying a single file into iCloud Drive. Sometimes details become clearer when you don’t stop at one, but move a whole folder of 20 small files at once. In particular, I wanted to look more carefully at how files are chunked for storage in iCloud, whether throttling could limit performance, and what the limits on iCloud Drive performance are.

Test

I created a folder containing 20 small Rich Text files, and simply dragged that into an existing folder in my iCloud Drive. I have Optimise Mac Storage on, but not Desktop & Documents, and the folder that I moved the test folder into was marked as being evicted, although some of its contents had been materialised (downloaded) locally.

To estimate the chunk size used by MMCS, I moved several files of different sizes, up to 28 MB, and read the log entries in which MMCS reports the number of chunks it made from each.

I captured the log record for a period of ten seconds starting with the folder move using the ready-made feature in Mints (essentially the same as that in Cirrus).

iCloud Drive folder upload

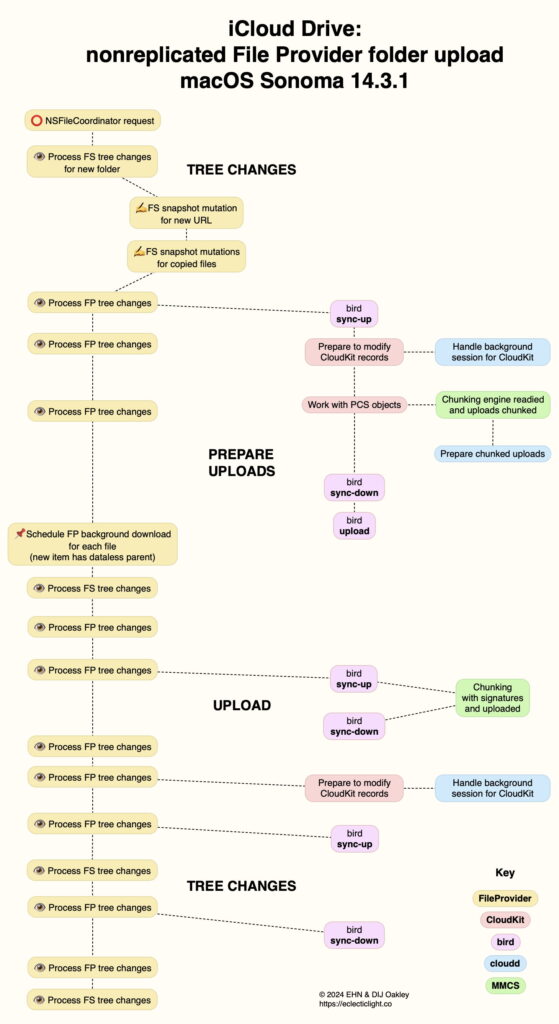

In broad terms, my previous diagram showing stages required to copy up a single file appeared reasonably accurate, although its details of the chunking and chunk upload performed by MMCS weren’t correct. Here’s my updated account as a diagram.

My previous test used a single file copied by Cirrus; moving a folder in the Finder instead elicits an NSFileCoordinator request, shown at the top left. FileProvider then processes server (FS) changes resulting from the creation of the new folder in iCloud Drive, going through a similar process of ‘snapshot mutations’ for that and the files to be copied.

Following those, bird reports the start of its sync-up phase, CloudKit prepares to modify the records in iCloud’s database, and MMCS, the chunking engine used to package file data up for transmission and storage, starts up and chunks the files to be uploaded. At this stage, though, no chunks are uploaded.

bird then reports sync-down, after which it notes the start of the upload phase. FileProvider schedules background downloads for each file, as the new folder has a dataless (evicted) parent folder. It then busies itself processing FileProvider local and server tree changes. Throughout this process, FileProvider and other processes work on the whole batch of 20 files, rather than passing them through a processing pipeline one at a time.

bird reports another sync-up, when MMCS adds signatures and keys to the file data chunks, and they’re uploaded to the iCloud server with cloudd activating the connection and CloudKit recording and checking any errors. After that bird calls sync-down for the final phase of tree changes. CloudKit prepares to modify its records, in a background session of cloudd. FileProvider processes a long succession of local and server tree changes, which bird reports are synced up, with a final sync-down.

Throttling

During these log explorations, I have noted the occasional report of throttling occurring during transactions with iCloud Drive. One of the reasons for moving a folder of 20 files was to see if I could trigger it, but I’m delighted to report that failed, and I can see no evidence that copying up a larger number of smaller files to iCloud Drive should be throttled in Apple’s meaning of the term, where requests to CloudKit are blocked for a fixed period.

I did, though, observe two instances of fileproviderd, the FileProvider service, being subjected to a throttle, one for a period of 0.2 s, the other for 0.4 s. What FileProvider does in that circumstance is to create a new watcher to retry its request, using XPC to com.apple.fileproviderd.throttling-retry, which is submitted by DAS and registered with CTS. The following log entries are typical.

1.367044 fileproviderd ┳16f6 ✅ done executing <FS1 ⏳ fetch-metadata(docID(15239)) why:itemChangedInTree sched:utility#1708984681.334466 from:<start:1708984681 backoff:200ms0µs> ⧗throttling> [duration 13ms759µs]

1.367978 fileproviderd ⏳ FS: schedule throttling handling in 199ms2µs

1.368030 FileProviderDaemon [NOTICE] ⏱ com.apple.fileproviderd.throttling-retry: new watcher registered for c{48}2.FS

1.368041 FileProviderDaemon [NOTICE] ⏱ com.apple.fileproviderd.throttling-retry: registering xpc_activity

Then, just over 200 ms later:

1.573232 fileproviderd ⌛ FS: 1 throttles expired

1.573893 fileproviderd ┏1706 💡 trigger: throttleExpired(i:docID(15239) fetch-metadata)

1.575337 fileproviderd ┗1706

The only slightly concerning log entry I noticed was one referring to a throttle of 12 minutes:

3.246 com.apple.FileProvider ⏳ FP: schedule throttling handling in 11min59s

but that didn’t appear to have any adverse consequences.
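For anyone wanting to tally throttle delays across a longer log excerpt, here’s a minimal Python sketch that pulls the delay out of ‘schedule throttling handling’ entries like those quoted above. The function names are my own, and it assumes that durations only ever use the units seen in those entries (min, s, ms and µs), which may not hold for every entry FileProvider writes.

```python
import re

# Units seen in the quoted log entries, expressed in seconds.
# Assumption: no other units (hours, nanoseconds) appear in these entries.
_UNIT_SECONDS = {"min": 60.0, "s": 1.0, "ms": 1e-3, "µs": 1e-6}

# Longer unit names are listed first so "ms" and "min" aren't read as a bare "s".
# Both the micro sign and the Greek letter mu are accepted.
_TOKEN = re.compile(r"(\d+)(min|ms|[µμ]s|s)")
_DELAY = re.compile(r"schedule throttling handling in (\S+)")


def parse_duration(text):
    """Convert a compact duration such as '199ms2µs' or '11min59s' to seconds."""
    tokens = _TOKEN.findall(text)
    if not tokens:
        raise ValueError(f"no duration found in {text!r}")
    return sum(
        int(value) * _UNIT_SECONDS[unit.replace("μ", "µ")]
        for value, unit in tokens
    )


def throttle_delay(entry):
    """Return the scheduled throttle delay in seconds, or None if the entry has none."""
    match = _DELAY.search(entry)
    return parse_duration(match.group(1)) if match else None
```

Given the entry at 1.367978 above, this returns a delay of just over 0.199 s, which tallies with the throttle being reported as expired at 1.573232, some 0.205 s later. The 12 minute entry comes out as 719 s.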

Chunking and iCloud Drive limits

The most fruitful part of these explorations was insight gained into one of the oldest parts of iCloud, MMCS, which stands for MobileMe Chunking Service. In case you don’t remember it, MobileMe was first released under the brand name of iTools just over 24 years ago. Not only does its name live on in the name of this service within iCloud, but its configuration settings are also still listed in a MobileMe pathname.

Despite its ancient origins, MMCS is thoroughly modern, with support for APFS, including its sparse files. It appears to work on files to be uploaded to iCloud Drive in two separate phases, the first dividing their data up into chunks, and the second adding a signature and a 16-byte key to each chunk. The latter includes a short hash of the chunk contents.

Although MMCS doesn’t report the maximum size of chunks in the log, it does provide details for each file that it chunks. Current system limits allow chunk sizes of up to 28,455,742 bytes (about 28 MB), and set a fixed maximum chunk size of 33,554,432 bytes (32 MiB, or about 33.6 MB). However, in practice the preferred chunk size appears to be just over 15,350 bytes, considerably lower than either system maximum.
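To give a feel for what that preferred size means, here’s a rough Python sketch estimating how many chunks a file would be divided into. It assumes every chunk is exactly the preferred size, which is a simplification: the log only suggests chunks of just over 15,350 bytes, and MMCS may well vary chunk sizes within a file. The constant and function names are mine, not anything MMCS exposes.

```python
# Figures taken from the text above; names are illustrative only.
PREFERRED_CHUNK_BYTES = 15_350        # approximate preferred size seen in the log
ALLOWED_MAX_CHUNK_BYTES = 28_455_742  # allowed maximum in current system limits
FIXED_MAX_CHUNK_BYTES = 33_554_432    # fixed maximum, 32 * 1024 * 1024


def estimated_chunks(file_bytes, chunk_bytes=PREFERRED_CHUNK_BYTES):
    """Estimate the chunk count, assuming uniform chunks of chunk_bytes."""
    if file_bytes < 0 or chunk_bytes <= 0:
        raise ValueError("file size must be >= 0 and chunk size must be > 0")
    # Integer ceiling division: a partly filled final chunk still counts.
    return (file_bytes + chunk_bytes - 1) // chunk_bytes
```

On that assumption, a 28 MB file (taken as 28,000,000 bytes) would be split into around 1,825 chunks, whereas at either system maximum it would fit in a single chunk.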

The MMCS system limits property list contains details of two other constraints that are applied, depending on the location of Apple’s iCloud servers. The first is connection.max.requests which can be unlimited on several servers, or may be restricted to 100. There’s also a set minimum throughput, ranging from 540 KB/s to just over 1,700 KB/s.
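If the set minimum throughput is read as a floor on the sustained transfer rate, which is my assumption rather than anything stated in the property list, then it puts a rough ceiling on how long a transfer should take. This Python sketch works that out, taking 1 KB as 1,000 bytes; the function name is hypothetical.

```python
def slowest_transfer_seconds(file_bytes, min_kb_per_s, bytes_per_kb=1000):
    """Time to move file_bytes if the rate never exceeds the set minimum.

    Assumes the minimum throughput acts as a floor on sustained rate,
    and ignores chunking, signing and connection overheads.
    """
    if file_bytes < 0 or min_kb_per_s <= 0:
        raise ValueError("file size must be >= 0 and throughput must be > 0")
    return file_bytes / (min_kb_per_s * bytes_per_kb)
```

For a 28,000,000 byte file, that gives just under 52 s at 540 KB/s, and about 16.5 s at 1,700 KB/s.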

Summary of limits

Stages in transfers with iCloud Drive are subject to throttling, although throttles appear to occur infrequently and only last a few hundred milliseconds.

Single operations such as folder transfers are managed as a batch rather than a pipeline.

For transfer and storage in iCloud, files are divided into chunks of just over 15,350 bytes in size, although the maximum chunk size imposed by the system is either 28,455,742 bytes (about 28 MB), or a fixed maximum of 33,554,432 bytes (32 MiB, or about 33.6 MB).

Some iCloud servers may impose a connection.max.requests of 100, although others are unlimited.

Set minimum throughput ranges from 540 KB/s to just over 1,700 KB/s, although faster rates should normally be obtained.