Is Apple lagging in AI?

As we’ve become increasingly besotted with the achievements and failings of Large Language Models (LLMs) like GPT-4 in ChatGPT, it has become fashionable to speculate that Apple has been left behind by these recent developments in AI and Machine Learning (ML), and to wonder when and how it might catch up. This article considers what Apple might have up its sleeve for macOS in the coming year or so.

Apple has declared that its greatest interest is in on-device ML and AI, citing its overriding concerns with privacy. While this is heartening, there are other good reasons that Apple doesn’t want to commit to large-scale off-device AI, which could only compete for bandwidth and servers with iCloud and other profitable services.

Apple’s products are already well-equipped to perform sophisticated ML tasks, unlike the hardware of most of its competitors. For instance, all iPhone models introduced since the iPhone 8 in September 2017, all iPads introduced since March 2020, all Apple silicon Macs, and the Apple Vision Pro have a neural engine (ANE) in their chip. Poorest-equipped are Intel Macs, although Apple’s engineers have surprised us with the amount of ML they have been able to run with only limited hardware support.

The last few years have seen the use of ML in a wide range of tasks across macOS, iPadOS and iOS. Some of the more familiar examples include:

Visual Look Up, local image analysis and recognition, with off-device reference data;

Live Text, on-device text recognition in images;

machine translation, on- or off-device;

natural language processing, on-device.

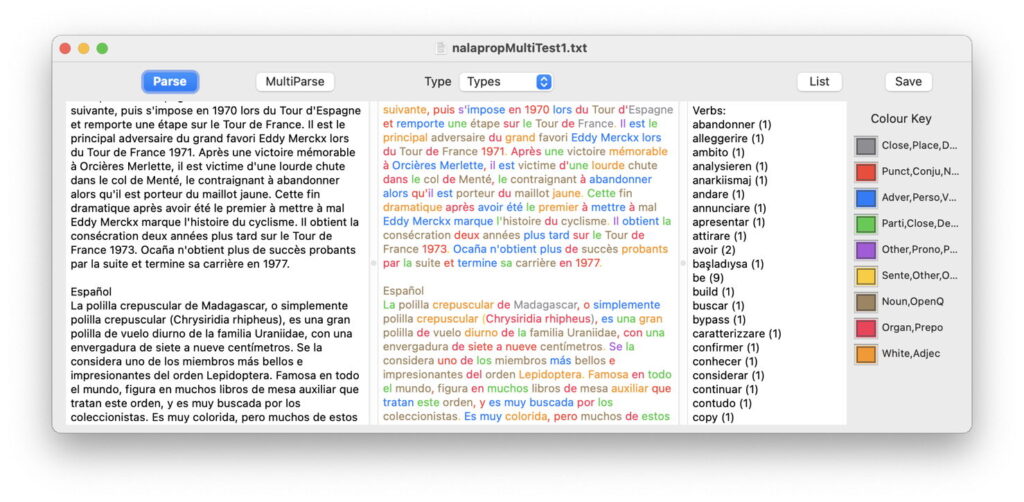

One simple illustration of these is in natural language processing, as shown in my app Nalaprop.

This recognises and analyses multiple languages in the same text document, here with French (upper paragraph) and Spanish (lower). Words are parsed into different parts of speech, and can be taken down to lemmas, word roots such as the verb ‘be’ for ‘is’, ‘are’, ‘was’, etc. Currently this supports half a dozen languages in full, and recognises most others. Nalaprop doesn’t have to work any magic with neural networks or ML to deliver this, as it’s all built into an accessible API.
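As a rough idea of how little code this kind of analysis needs, here is a minimal sketch in Swift. It assumes that the API in question is Apple’s NaturalLanguage framework, which this article doesn’t name, and it isn’t Nalaprop’s own code. The function names `dominantLanguage(of:)` and `analyse(_:)` are hypothetical, chosen for illustration.

```swift
import NaturalLanguage

/// Returns the most likely language of `text`, or nil if it can't be determined.
/// Hypothetical helper wrapping NLLanguageRecognizer.
func dominantLanguage(of text: String) -> NLLanguage? {
    return NLLanguageRecognizer.dominantLanguage(for: text)
}

/// Tags each word in `text` with its part of speech and its lemma.
/// Either tag may be nil when no model is available for that language.
func analyse(_ text: String) -> [(word: String, partOfSpeech: String?, lemma: String?)] {
    // Ask the tagger for both schemes up front so that each can be queried.
    let tagger = NLTagger(tagSchemes: [.lexicalClass, .lemma])
    tagger.string = text

    var results: [(word: String, partOfSpeech: String?, lemma: String?)] = []
    let options: NLTagger.Options = [.omitWhitespace, .omitPunctuation]

    // Walk the text word by word, reading the part of speech for each.
    tagger.enumerateTags(in: text.startIndex..<text.endIndex,
                         unit: .word,
                         scheme: .lexicalClass,
                         options: options) { tag, range in
        // Look up the lemma for the same word.
        let (lemmaTag, _) = tagger.tag(at: range.lowerBound, unit: .word, scheme: .lemma)
        results.append((word: String(text[range]),
                        partOfSpeech: tag?.rawValue,
                        lemma: lemmaTag?.rawValue))
        return true // continue to the next word
    }
    return results
}
```

To handle a document that mixes languages, one approach would be to split it into paragraphs and call `dominantLanguage(of:)` and `analyse(_:)` on each in turn. The tags returned depend on the language models installed on that Mac or device, so results can differ between systems.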

Although off-device translation is widely available, Apple’s operating systems also offer on-device translation, to ensure that no user content leaves the Mac or device.

Other examples of ML in use on Apple devices include motion activity models, gait analysis, hand gestures for the Apple Vision Pro, and further improvements in word and sentence completion.

Commentators appear to have been surprised by a recent paper published on arXiv, although this is but one of many that show where Apple’s interests lie, and how productive its AI/ML teams have been. Recent major papers include:

arXiv:2310.07704v1 October 2023, describing the Ferret multimodal large language model (MLLM) that understands spatial references in images and in their verbal descriptions;

arXiv:2309.17102v2 February 2024, describing instruction-based image editing enhanced by an MLLM;

arXiv:2306.07952v3 March 2024, introducing MOFI, a vision foundation model to learn image representations from noisy annotated images, with a constructed dataset of 1.1 billion images with 2.1 million entities, based on a web corpus of 8.4 billion image-text pairs;

arXiv:2403.09611v1 March 2024, detailing the design and implementation of MM1, a family of MLLMs for multi-image reasoning, demonstrated using captioning and visual question answering tasks. These can, for example, count the number of beers on a table, read the prices from an image of the menu, and calculate their total cost.

The emphasis in this recently published work is on the integration of images with the text of LLMs to form multimodal large language models (MLLMs) that can be used to reason about the content of images. Although this research uses very large corpora and datasets in training, it appears aimed at delivery for on-device use.

While LLMs like GPT-4 in ChatGPT have been grabbing the limelight, researchers working in and with Apple appear to have been pursuing goals that are of greater relevance to Apple’s computers and devices, including its Vision Pro. With luck, WWDC this coming June will bring more detailed announcements about the fruit of all this research.