Multitasking, parallel processing, and concurrency in Swift

It has been a very long time since computers executed code in a simple, linear manner. Even back in the 1950s, most used a system of interrupts or traps to handle errors, input/output, and other features, although those weren’t considered to be multitasking.

In computers with a single processor core, multitasking was a way of cheating to give the impression that the processor was doing several things at once, when in fact all it was doing was switching rapidly between two or more different programs. There are two fundamental models for doing that:

cooperative multitasking, in which individual tasks yield to give others processing time;

preemptive multitasking, in which a scheduler switches between tasks at regular intervals.

When a processor switches from one task to the next, the current task state must be saved so that it can be resumed later. Once that’s done, the state of the next task is loaded, completing what’s known as a context switch. That incurs overhead, both in processing time and in memory. Inevitably, switching between lightweight tasks has less overhead. Different strategies have been adopted to balance the size of tasks against the overhead imposed by context switching, and terminology differs between them, variously using words such as processes, threads and even fibres, which can prove thoroughly confusing.

Preemptive multitasking was championed on larger computers and in Unix, but was seldom attempted in personal computers until the mid-1980s. When the Macintosh was released forty years ago, it had too little memory, and its Motorola 68000 processor too little computing power, to make it a good candidate for multitasking. Andy Hertzfeld wrote Switcher for Classic Mac OS in 1984-85 to enable the user to switch between apps, and that led to MultiFinder in 1987, bringing cooperative multitasking.

At the same time, Apple developed a variant of the Macintosh II with a Paged Memory Management Unit (PMMU) to support virtual memory, and in February 1988 it released the first Unix for Macintosh, A/UX, complete with preemptive multitasking. But Mac OS didn’t gain true preemptive multitasking until the release of Mac OS X 12 years later.

Cooperative multitasking worked well much of the time, but let badly behaved tasks hog the processor and block other tasks from getting their fair share. It was greatly aided by the main event loop at the heart of Mac apps that waited for control input to direct the app to perform work for the user. If an app charged off to spend many seconds tackling a demanding task, without polling the main event loop, that app could lock the user out for what seemed like an age.
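The same hazard exists in any cooperative scheme, including the cooperative thread pool behind Swift's concurrency runtime, where a task gives up its thread only at a suspension point. As a rough modern analogue rather than anything from Classic Mac OS, the following sketch shows a long-running job that yields periodically. The function name sumOfSquares(upTo:) and the interval of 1,000 iterations are assumptions chosen purely for illustration.

```swift
// Hypothetical long-running job that cooperates with other tasks.
func sumOfSquares(upTo limit: Int) async -> Int {
    // An empty range has nothing to sum.
    guard limit >= 1 else { return 0 }
    var total = 0
    for n in 1...limit {
        total += n * n
        // Every 1,000 iterations, offer the thread to other tasks,
        // much as a well-behaved app would poll its event loop.
        if n % 1_000 == 0 {
            await Task.yield()
        }
    }
    return total
}
```

Without the call to Task.yield(), the loop would still produce the same result, but it would hold its thread until it finished, which is the behaviour that made some apps so unresponsive.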

As IBM was developing its first Personal Computer, and Apple its first Macintosh, on the other side of the Atlantic in England two engineers, David May and Robert Milne, were developing an alternative approach to computing based on a parallel architecture of multiple high-speed processors connected by high-speed serial links, the Transputer. In 1985, their company Inmos released its first usable chip, the T414 Transputer, a 32-bit parallel processor with 2 KB of on-board memory and running at up to 20 MHz, with four serial links at up to 20 Mb/s.

Two years later, in 1987, their second-generation chip, the T800, started shipping in small quantities and at high cost because of initial low yields. Its major advance was a 64-bit floating-point unit implementing the new IEEE 754-1985 standard for floating-point arithmetic. This also had a whole 4 KB of on-chip memory and could run at up to 25 MHz when you were lucky. For comparison, the single 68020 CPU in a Macintosh II of the same year ran at 16 MHz, and had a separate 68881 floating-point unit.

The Transputer wasn’t envisaged to be the sole processor type in a computer. Demonstration designs almost invariably interfaced them with an IBM PC, as an array of Transputers used as co-processors for computationally intensive tasks, of which the most popular was computing Mandelbrot sets for on-screen display. Matching the number of squares within an image to the number of T800 chips in an array enabled companies such as Meiko Scientific, Parsytec and Microway to enthuse potential customers. By the autumn of 1987, several major PC manufacturers were developing accelerator boards such as Commodore’s for its Amiga A2000, which relied on a Motorola 68000 as the main CPU.

Perhaps the biggest challenge for those intending to use Transputers was software support for these large farms of cores. David May and others at Inmos enlisted the help of Tony Hoare to develop a parallel programming language, occam (perversely named with a small o), forming the software heart of the Transputer Development System, TDS, inevitably nicknamed tedious. occam was different in that it required sequential instructions to be written explicitly, such as

    SEQ
      x := x + 10.5
      y := x - 5.5

while those to be run in parallel were marked as

    PAR
      p()
      q()

Because of occam’s structure, its bundled folding editor was probably the first to fold sections of code away to reveal that structure, and did so spectacularly. This took a similar approach to that now available in BBEdit when working with XML and similar documents.

By 1990, the Transputer was falling behind mainstream processor development, but it provided extensive experience in running code in parallel across multiple processor cores that was later used in the design of computer clusters. Between 2003 and 2006, Apple shipped thousands of Xserves with PowerPC G4 and G5 processors to build supercomputer clusters.

Apple’s first Macs with dual processors came in 2000, with PowerPC 7400 (G4) chips in Power Mac G4 desktop systems. In 2005, the Power Mac G5 was the first Mac to use dual-core PowerPC G5 processors, then the iMac 17-inch of 2006 used Apple’s first Intel Core Duo processor with two cores.

Since Apple started switching Macs to its own chips in 2020, every new Mac has come with multiple CPU cores of two different types, and runs macOS with its support for both preemptive and cooperative multitasking. What we have now, though, is the inverse of where this all started: multiple processor cores on which threads really are executing simultaneously, with no need to pretend. For those, Swift 6 supports structured concurrency to address many of the problems encountered in parallel processing and cooperative multitasking.

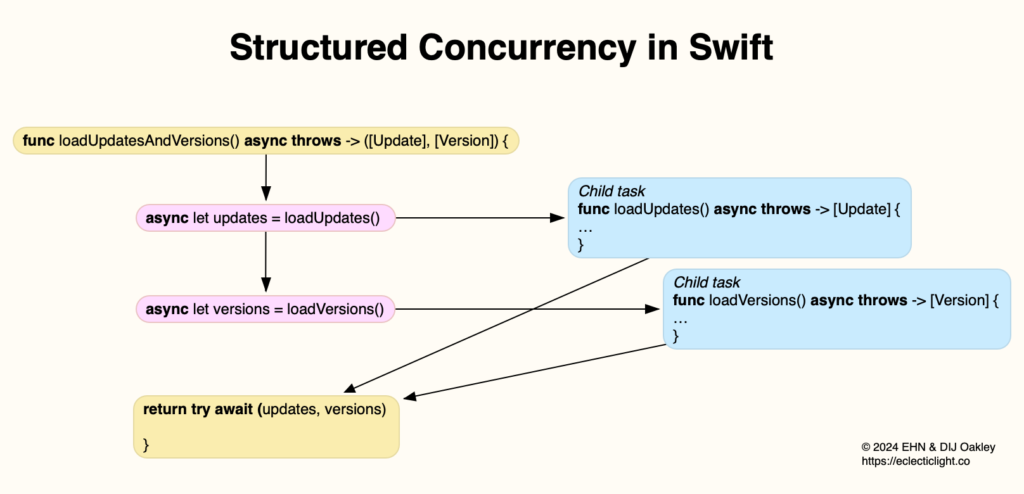

For the sake of example, assume an app needs to access two types of remote information stored in Property Lists on a server somewhere. One type it parses into a list of Updates, the other into a list of Versions. Because those have to be fetched over the network, building those lists could take some seconds, and on occasion might fail altogether. One way to tackle this is with a function loadUpdatesAndVersions() shown below.

This first calls a child task asynchronously, which tries to load the Updates (shown in blue). As soon as that task is running, the main function calls a similar child task to load the Versions (blue). The main task is then suspended as it awaits the lists of Updates and Versions from those child tasks, in the last line of code, which returns the two lists to the rest of the app.
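In text form, the approach described might look something like the following. This is a minimal sketch rather than the original listing: it assumes the child tasks are created with async let, and the Update and Version types, the loadUpdates() and loadVersions() helpers and their stubbed results are all hypothetical stand-ins for the real network fetch and Property List parsing.

```swift
// Hypothetical model types; their real fields aren't specified here.
struct Update: Equatable { let name: String }
struct Version: Equatable { let number: String }

// Stand-in for fetching and parsing the Updates Property List.
func loadUpdates() async throws -> [Update] {
    try await Task.sleep(nanoseconds: 50_000_000) // simulate network latency
    return [Update(name: "ExampleUpdate")]
}

// Stand-in for fetching and parsing the Versions Property List.
func loadVersions() async throws -> [Version] {
    try await Task.sleep(nanoseconds: 50_000_000) // simulate network latency
    return [Version(number: "1.0")]
}

func loadUpdatesAndVersions() async throws -> ([Update], [Version]) {
    // Each async let starts a child task straight away,
    // so the two loads can run concurrently.
    async let updates = loadUpdates()
    async let versions = loadVersions()
    // The parent task suspends here until both children have finished,
    // or until one of them throws an error.
    return try await (updates, versions)
}
```

Because the child tasks are scoped to the function, neither can outlive it: if one load throws, the other is cancelled and awaited before the error propagates to the caller, which is much of what makes the concurrency "structured".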

This may appear trivially simple, but building it into the Swift language enables replacement of a lot of contorted code that had been used previously.

In 40 years we have progressed from multitasking of single processors, and massively parallel computing, to cooperative multitasking of multiple cores. It has already been quite a journey.