ChatGPT outperforms undergrads in intro-level courses, falls short later

(credit: Caiaimage/Chris Ryan)

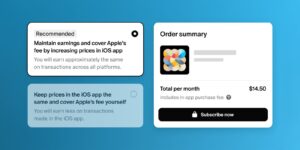

“Since the rise of large language models like ChatGPT there have been lots of anecdotal reports about students submitting AI-generated work as their exam assignments and getting good grades. So, we stress-tested our university’s examination system against AI cheating in a controlled experiment,” says Peter Scarfe, a researcher at the School of Psychology and Clinical Language Sciences at the University of Reading.

His team created over 30 fake psychology student accounts and used them to submit ChatGPT-4-produced answers to examination questions. The anecdotal reports were true: the AI use went largely undetected, and, on average, ChatGPT scored better than human students.

Rules of engagement

Scarfe’s team submitted AI-generated work in five undergraduate modules, covering classes needed during all three years of study for a bachelor’s degree in psychology. The assignments were either 200-word answers to short questions or more elaborate essays, roughly 1,500 words long. “The markers of the exams didn’t know about the experiment. In a way, participants in the study didn’t know they were participating in the study, but we’ve got necessary permissions to go ahead with that,” Scarfe claims.