Last Week on My Mac: Performance by design

As the UK enters the last few days before its general election, and the US prepares to elect its president in November, we’re getting accustomed to ambitious claims of what potential governments and presidents will do. This week I’ve been examining those made for what Swift 6 terms concurrency, the combination of asynchronous and parallel code. To quote from the language documentation:

“Swift has built-in support for writing asynchronous and parallel code in a structured way. Asynchronous code can be suspended and resumed later, although only one piece of the program executes at a time.” “Parallel code means multiple pieces of code run simultaneously — for example, a computer with a four-core processor can run four pieces of code at the same time, with each core carrying out one of the tasks.”

Although I’m impressed with Swift’s support for asynchronous code, I’ve so far been unable to discover any equivalent for running parallel code on multiple cores, and I’m concerned at their conflation in the term concurrency.

Running asynchronous tasks using cooperative multitasking is ideal for code that takes time to complete because it has to wait for external delays, typically fetching data from a remote source. Those tasks impose little local CPU core load, so their suspension allows other tasks in the same thread to proceed and make better use of the core. Swift 6 makes this easy to write, read and debug using async/await.

Running threads in parallel on multiple cores is best suited to code that imposes substantial CPU core load because it's computationally intensive. There's little or no advantage to running that as multiple tasks within the same thread, but dividing the work across several cores at once should enable it to complete proportionately faster.

These differences are illustrated in two charts obtained from Xcode’s Instruments on the same CPU-intensive code.

In the first, four threads are run at high Quality of Service (QoS) using Dispatch, inviting macOS to schedule each to run on a different core. Although the threads are periodically relocated between cores, completing the whole task takes a quarter of the time it would take on a single core, thanks to multithreading.
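As a rough illustration of that first arrangement, here's a minimal sketch using Dispatch. It isn't the code used for the chart: the `burn` workload, the `ResultStore` helper and the choice of `.userInitiated` as the "high" QoS are all assumptions made for the example.

```swift
import Foundation

// Hypothetical CPU-bound workload, purely illustrative: sums square roots.
func burn(_ iterations: Int) -> Double {
    var total = 0.0
    for i in 0..<iterations {
        total += Double(i + 1).squareRoot()
    }
    return total
}

// Hypothetical helper: a lock-protected array that work items can write to safely.
final class ResultStore: @unchecked Sendable {
    private var values: [Double]
    private let lock = NSLock()

    init(count: Int) {
        values = Array(repeating: 0, count: count)
    }

    func set(_ value: Double, at index: Int) {
        lock.lock()
        defer { lock.unlock() }
        values[index] = value
    }

    var snapshot: [Double] {
        lock.lock()
        defer { lock.unlock() }
        return values
    }
}

// Submit `count` copies of the workload to a concurrent global queue at high QoS,
// leaving macOS free to schedule them on different cores, then wait for them all.
func runInParallel(count: Int, iterations: Int) -> [Double] {
    let store = ResultStore(count: count)
    let group = DispatchGroup()
    let queue = DispatchQueue.global(qos: .userInitiated) // assumed QoS
    for index in 0..<count {
        queue.async(group: group) {
            store.set(burn(iterations), at: index)
        }
    }
    group.wait() // blocks the caller until every work item has finished
    return store.snapshot
}
```

Calling `runInParallel(count: 4, iterations: 50_000_000)` while watching CPU History or Instruments should show the load spread across cores, although how macOS actually schedules the threads is its decision, not the code's.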

When the same tasks are run asynchronously in the same thread using multitasking, there’s no benefit to performance, as all four run together and take four times the period that they would when divided up and run on four cores.
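One way to reproduce that second arrangement is to confine the work to the main actor, so every task takes its turn on the main thread. This is only a sketch under that assumption, and not necessarily how the charted code was written; `burn`, `burnOnMainThread` and `runOnOneThread` are hypothetical names.

```swift
// Hypothetical CPU-bound workload, purely illustrative: sums square roots.
func burn(_ iterations: Int) -> Double {
    var total = 0.0
    for i in 0..<iterations {
        total += Double(i + 1).squareRoot()
    }
    return total
}

// Isolating the work to the main actor forces every call onto the main thread.
@MainActor
func burnOnMainThread(_ iterations: Int) -> Double {
    burn(iterations)
}

// Start `count` child tasks that each hop to the main actor to do their work.
// They are written as concurrent tasks, but run one after another on one thread.
func runOnOneThread(count: Int, iterations: Int) async -> [Double] {
    await withTaskGroup(of: Double.self, returning: [Double].self) { group in
        for _ in 0..<count {
            group.addTask { await burnOnMainThread(iterations) }
        }
        var results: [Double] = []
        for await value in group {
            results.append(value)
        }
        return results
    }
}
```

Because `burn` never suspends, each task holds the main thread until it has finished, so four of them should take about four times as long as one.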

Accounts of concurrency in Swift all too often use timers as illustrations, despite their being atypical. Run four tasks consecutively, each consisting of a timed wait for one second, and the sequence takes a total of four seconds to complete. Run them concurrently instead, whether by multithreading or cooperative multitasking, and all four complete in a total of about one second, as their timers overlap rather than run one after another.
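The timer illustration is easy to sketch with async/await. The function names here are hypothetical, and `Task.sleep` stands in for an external delay such as a network fetch.

```swift
import Dispatch

// Stand-in for an I/O wait: suspends the task without occupying a core.
func timedWait(milliseconds: UInt64) async {
    try? await Task.sleep(nanoseconds: milliseconds * 1_000_000)
}

// Await each wait in turn: elapsed time is roughly the sum of the waits.
func runConsecutively(count: Int, milliseconds: UInt64) async -> Double {
    let start = DispatchTime.now().uptimeNanoseconds
    for _ in 0..<count {
        await timedWait(milliseconds: milliseconds)
    }
    return Double(DispatchTime.now().uptimeNanoseconds - start) / 1e9
}

// Start every wait as a child task: elapsed time is roughly that of one wait.
func runConcurrently(count: Int, milliseconds: UInt64) async -> Double {
    let start = DispatchTime.now().uptimeNanoseconds
    await withTaskGroup(of: Void.self) { group in
        for _ in 0..<count {
            group.addTask { await timedWait(milliseconds: milliseconds) }
        }
        // The group waits for all its child tasks before returning.
    }
    return Double(DispatchTime.now().uptimeNanoseconds - start) / 1e9
}
```

With four waits of 1000 ms each, `runConsecutively` should return roughly four seconds and `runConcurrently` roughly one, give or take scheduling overhead.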

Timers aren’t a bad model for most I/O tasks, provided that the delays resulting in task suspension occur externally. But for CPU-intensive code they break down. Four tasks that each complete in one second when run alone on a core can’t all complete in one second when run asynchronously on a single core; there they take a total of four seconds, assuming all cores are similar and run at the same frequency and active residency.

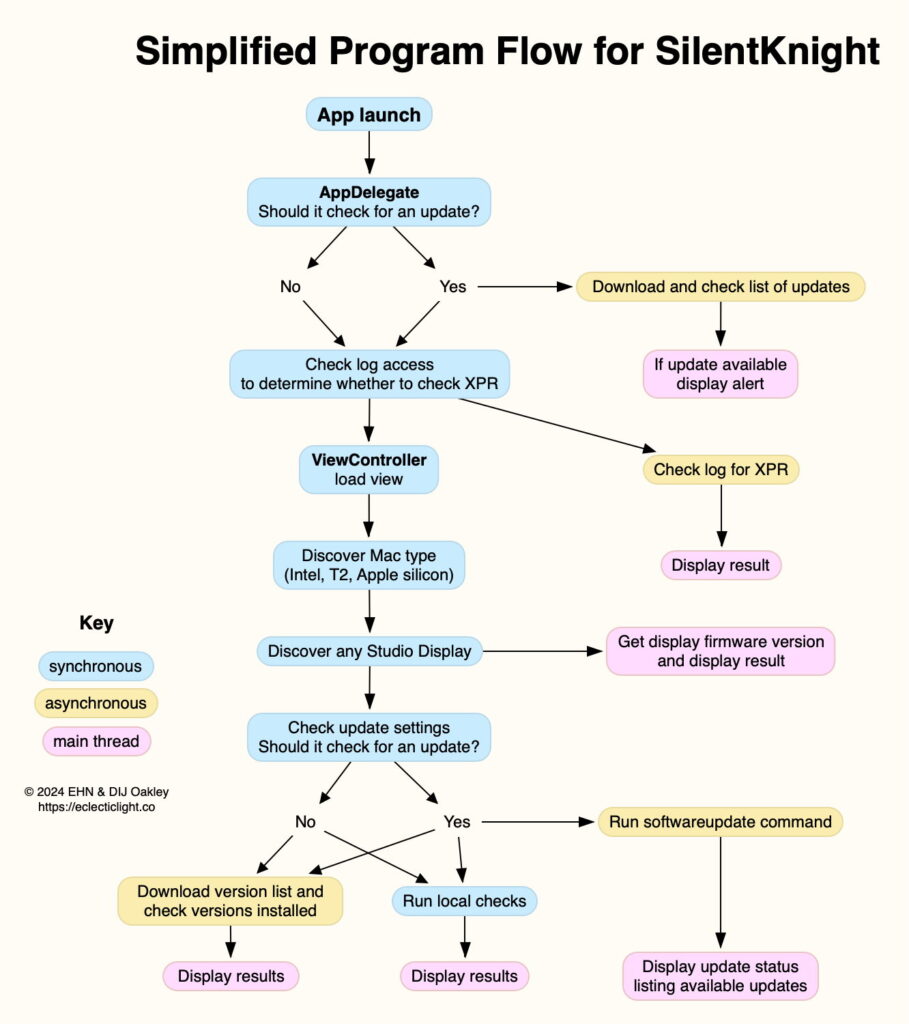

In reality, many apps are more complex and require careful design, and decisions on the most appropriate use of multithreading and multitasking. Even a simple app like SilentKnight merits thought and experiment.

Following launch, SilentKnight runs as a central sequence of synchronous tasks, four of which can branch asynchronously. Two of those rely on downloading property lists from my GitHub, classic instances of I/O tasks ideal for multitasking, but which can also be run in separate threads if you prefer.

The other two asynchronous tasks aren’t as simple. One runs the softwareupdate command in a separate thread and reports its result. The other extracts log entries for XPR reports, which involves waiting for results from a command tool, followed by computationally intensive local processing. Both are run in their own threads using Dispatch, and wouldn’t fare at all well in cooperative multitasking. You can see the effects of this program flow when you first launch SilentKnight: local checks are normally reported first, followed slightly later by the two others, from XPR and softwareupdate.
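For readers who haven't run a command tool from its own thread, the general pattern looks something like the following. This is a generic sketch rather than SilentKnight's code: `runTool` is a hypothetical helper, `.userInitiated` is an assumed QoS, and the usage example calls `/bin/echo` as a harmless stand-in for the real tool.

```swift
import Foundation

// Hypothetical helper: runs a command tool on a background queue and passes its
// standard output to `completion`, which is called on that background queue.
func runTool(at path: String, arguments: [String],
             completion: @escaping @Sendable (Result<String, Error>) -> Void) {
    DispatchQueue.global(qos: .userInitiated).async { // assumed QoS
        let process = Process()
        let pipe = Pipe()
        process.executableURL = URL(fileURLWithPath: path)
        process.arguments = arguments
        process.standardOutput = pipe
        do {
            try process.run()
            // Read before waiting, so a tool with lots of output can't fill
            // the pipe and stall.
            let data = pipe.fileHandleForReading.readDataToEndOfFile()
            process.waitUntilExit()
            completion(.success(String(decoding: data, as: UTF8.self)))
        } catch {
            completion(.failure(error))
        }
    }
}

// Usage, with /bin/echo standing in for the real command tool:
// runTool(at: "/bin/echo", arguments: ["hello"]) { result in
//     print(result)
// }
```

The calling thread carries on immediately, which is why results from slower tools arrive after the local checks. Anything that updates the interface from the completion closure has to hop back to the main thread first.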

Judging whether multitasking or multithreading is more appropriate isn’t something a compiler’s static analysis can get right, as external delays and local computational load are unpredictable. Thus the choice between running a task asynchronously in the same thread, and farming it out to run in parallel on another core, has to be made by design.

Making best use of the two types of CPU core in Apple silicon, running multiple threads in parallel, and multitasking in a single thread remain human tasks, requiring insight, experience and often some experimentation. While async/await is a big step forward, it doesn’t appear to be the landslide victory our politicians might wish for.