Everything Apple Intelligence can (and can’t) do in iOS 18.1

In an unprecedented move, Apple released the iOS 18.1 beta more than a month before the release of iOS 18. The company is running two betas in parallel—iOS 18 for all devices, and iOS 18.1, iPadOS 18.1, and macOS 15.1 for devices capable of running Apple Intelligence (iPhone 15 Pro and Pro Max, M-series iPads, and M-series Macs).

It’s been clear since WWDC in June that Apple Intelligence is coming in hot, with a phased rollout of features. Now, the timeline has changed, with none of the features coming in the iOS 18 release, some coming in iOS 18.1, some coming later this year, and some coming early in 2025. If you have a device capable of running it, here are the Apple Intelligence features you’ll find in iOS 18.1, and how they work in the current beta release.

Updated August 28: The third beta of iOS 18.1 is out now and it adds the new Clean Up editing tool in Photos, which lets you remove unwanted objects from your photos.

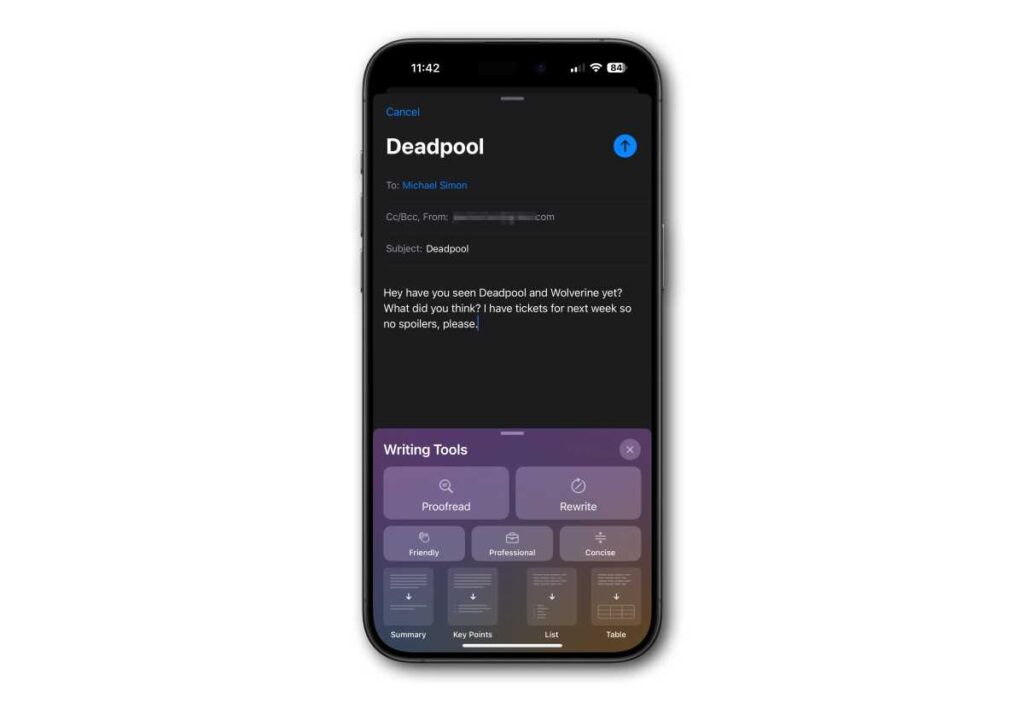

Writing tools

Anywhere you can select and copy/paste text, you can access a new writing tools menu. Select your text and then in the copy/paste popup menu, select Writing Tools (you may have to select the > arrow to see more options).

Apple Intelligence can proofread the selected text, highlighting grammar and punctuation problems and suggesting fixes, or simply rewrite it to make it more friendly and casual, more professional, or more concise.

For longer text, the tool can create a summary or a bulleted list of key points, or generate lists or tables from raw text.

Improved Siri

With iOS 18.1 you’ll notice a big change in how Siri looks but a much smaller change in how Siri works. Invoking Siri on your iPhone or iPad produces a colorful glow around the edges of the screen that reacts to your voice while you talk. You can also double-tap the bottom edge of the screen to bring up a keyboard and type to Siri.

But the boost in capabilities is limited. Siri better understands speech that includes “ums” and “ahs” or quick self-corrections, and it keeps better track of the context of your previous command so you can make more natural follow-up requests.

The big boost in Siri capabilities you saw at WWDC, with Siri having a sort of contextual knowledge of you as an individual and taking actions within apps, is coming later. The personal context update may come before the end of the year, but in-app interactivity and onscreen awareness aren’t coming until 2025.

Mail

At the top of each email or email thread is a “Summarize” button that can quickly tell you what an email, or an entire thread, is about.

Mail can also optionally show priority emails at the top of your inbox, and your inbox view will also show a brief summary of the email instead of the first couple of lines of the body. Those summaries will also appear in notifications on the lock screen or notification shade.

Messages

When you’re in a text conversation with someone, the keyboard will show smart reply suggestions. This seems to be limited to new conversations, and only as messages arrive: if you jump into an old text conversation, you just get the normal keyboard word suggestions.

New unread texts—and multiple texts from the same person—are summarized much like Mail messages are. In your Messages list, you see a short summary instead of the start of the most recent message, and the same is true of notifications on the Lock screen and Notifications shade.

Safari summaries

When in Safari’s Reader view, you’ll notice a new “Summarize” option at the top of the page that can create a one-paragraph summary of what’s on the page.

Call recording

Call recording is now built into the Phone app and Notes. Simply tap the call record button in the upper left of the call screen. A short message will play to let both sides know the call is being recorded. When the call is over, you’ll find the recording saved as a voice note in Notes, along with an AI-generated transcript.

Reduce Interruptions Focus

The new Reduce Interruptions focus mode is present in iOS 18.1. It uses on-device intelligence to look at notifications and incoming calls or texts, letting through those that it thinks may be important and silencing the rest (they still arrive, just silenced).

Any people or apps that you have set to always allow or never allow will still follow those rules, however. You’ll find it with all the other Focus modes, in Settings > Focus or by tapping Focus in Control Center.

Photos

Photos is getting a big interface overhaul in iOS 18, and it’s getting mixed reviews among early testers. The new AI features coming in iOS 18.1 are sure to please, though.

Natural language works in search, so you can look for people, places, and things in your photos in a casual way. An enhanced search will find specific moments in videos, too. You can also create Memories with natural language, and then tweak them in Memory maker.

Just note: The app may need to index your photo library overnight before these advanced natural language search tools work properly.

Clean Up

With the third beta of iOS 18.1 (and macOS 15.1), the Clean Up editing tool has been added to the Photos app. Tap the edit button on a photo, then the Clean Up tool. You can circle or paint over an area of a photo and after a few minutes, Apple Intelligence will remove it, trying to match the rest of the background.

Features coming in iOS 18.2 or 18.3

Once this first wave of Apple Intelligence features reaches everyone in iOS 18.1, we won’t have long to wait for the next set of improvements. There are more features due by the end of the year—probably in iOS 18.2 but possibly in iOS 18.3.

Most notably, the image generation features that were expected with the initial release now appear to be coming in iOS 18.2 or 18.3, too. That includes the Image Playground app for experimenting with AI image generation in a handful of styles, and Genmoji, for creating Memoji-inspired images of people.

The delayed features also include the much-vaunted ChatGPT integration. If you want to actually generate bodies of text and not just rewrite them, you’ll want that. It also will give Siri some new capabilities as it can hand off (with permission) questions and requests to ChatGPT.

Features coming in iOS 18.4 next year

If you’re looking forward to a much more powerful and capable Siri, you’ll be waiting until the spring of 2025. Those features are reportedly being targeted for iOS 18.4, which is expected to enter beta at the start of the year and likely release sometime around March.

This is when Siri will have the ability to “see” what is on your screen and act accordingly, and perhaps most importantly when it will be able to take lots of actions within apps. This requires a massive expansion of “app intents” (hooks that developers use to integrate with Siri), which will necessitate lots of testing and app updates.

Siri will also get the ability to build a semantic index of your personal context, so it understands things like familial relationships, frequently visited locations, and more. It is unclear at this time whether that feature will come by the end of this year or be a part of the big spring Siri update.