Last Week on My Mac: Mac mini M4 Pro first impressions, cores and more

My Mac mini M4 Pro arrived four days early, and swiftly exceeded all expectations. Everyone who has seen this new design remarks on how tiny it is, and I still keep looking at it, wondering how the smallest Mac I have ever owned is also by far the fastest. For those who collect numbers, it matched the growing collection in Geekbench’s database, with a single-core score of 3,892, a multi-core of 22,706, and Metal GPU of 110,960. Of course those new MacBook Pros with M4 Max chips are doing better with their extra two CPU cores and twice the number of GPU cores, but second best is still far superior to anything I’ve run before.

Not only is this my fastest Mac ever, but it was also the quickest and simplest to commission. It replaces my Mac Studio M1 Max, and now sits under my Studio Display using the Studio’s old keyboard and trackpad. As I’ll explain in a future article, I opted to migrate to the mini during initial setup using the Studio’s backup SSD, a sleek OWC Envoy Pro SX connected by Thunderbolt 3. Including the inevitable macOS update, the whole process took less than two hours, from the start of unboxing to the first full Time Machine backup.

With 48 GB memory and an internal SSD of 2 TB, I was keen to benchmark the SSD once its initial backup was done. Although I have reduced confidence in these figures, there’s no doubt that this SSD is significantly quicker than the 2 TB in the Studio; how much quicker is open to debate. AmorphousDiskMark reported a write speed of 7.7 GB/s, while my own Stibium gave 10.3 GB/s across a broad range of file sizes. There was closer agreement on read speed, at around 6.8 GB/s. Both read and write speeds are comfortably above what I expect from an external Thunderbolt 5 SSD, when I can finally get hold of one.

CPU cores

My M4 Pro is the full-performance variant, with 10 P and 4 E cores, set in three clusters: an E cluster with all four E cores, and two clusters of five P cores. Since its M3 chips, Apple has increased the maximum number of cores in a cluster from 4 (in M1 and M2 chips) to 6 (M3 and M4).

M4 P cores run at frequencies between 1260 and 4512 MHz (1.3-4.5 GHz), their maximum frequency being 111% that of the M3 P core, and 140% that of the M1 P core. M4 E cores have a narrower range of frequencies than those in the M3, from 1020 to 2592 MHz (1.0-2.6 GHz), so they run at 137% of M3 frequency when running low QoS threads, and at 94% of M3 when running at maximum frequency for high QoS threads spilt over from P cores. Those are going to make comparisons between E core performance very interesting indeed.
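To make those comparisons concrete, here is a minimal sketch that works backwards from the M4 frequencies and the quoted percentages to the older core frequencies they imply. The names are illustrative only, and because the percentages are rounded, the results are approximations rather than measured values.

```python
# M4 frequencies in MHz, as quoted in the text.
M4_P_MAX = 4512
M4_E_MIN = 1020
M4_E_MAX = 2592

# Each entry pairs an M4 frequency with the quoted ratio of M4 to the older core.
# The ratios are rounded to whole percentages, so the results are approximate.
QUOTED_RATIOS = {
    "M3 P max": (M4_P_MAX, 1.11),
    "M1 P max": (M4_P_MAX, 1.40),
    "M3 E min": (M4_E_MIN, 1.37),
    "M3 E max": (M4_E_MAX, 0.94),
}


def implied_frequency(m4_mhz, ratio):
    """Return the older core's frequency implied by an M4 frequency and a ratio."""
    return m4_mhz / ratio


implied = {
    name: implied_frequency(mhz, ratio)
    for name, (mhz, ratio) in QUOTED_RATIOS.items()
}

for name, mhz in implied.items():
    print(f"{name}: about {mhz:.0f} MHz")
```

On these figures the M3 P core maximum comes out a little over 4 GHz, and the M3 E core range is wider at both ends than that of the M4.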

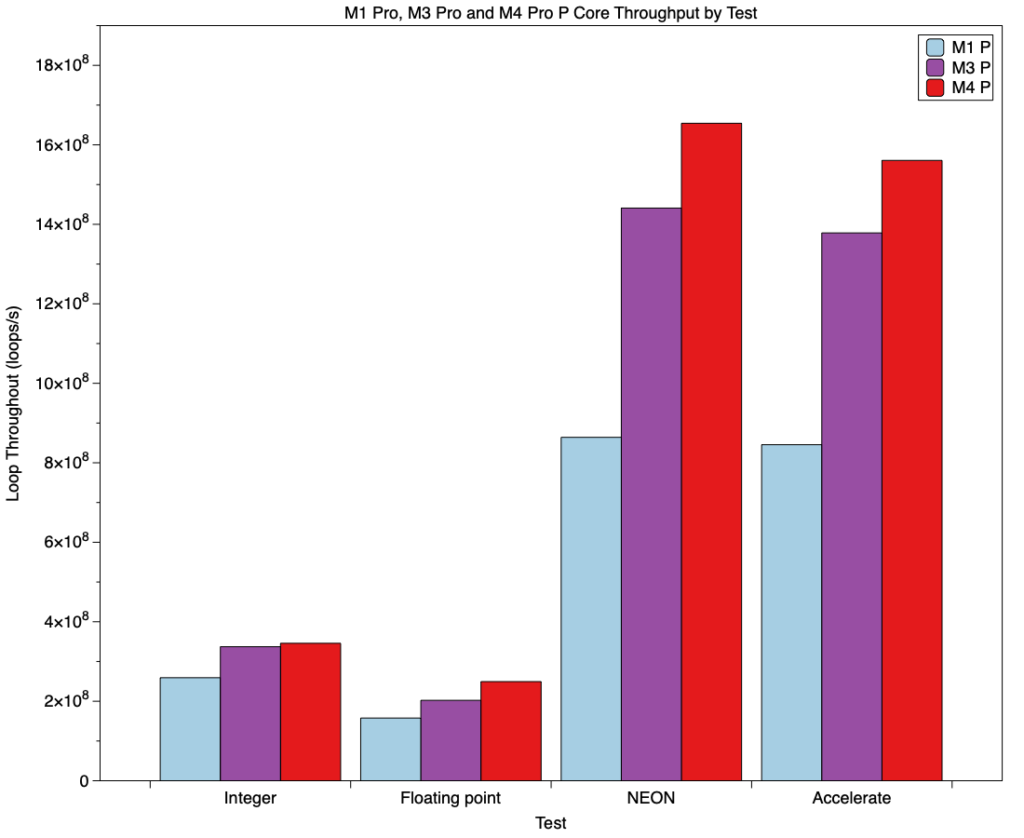

Single-thread and single-core comparisons of P core throughput are inevitably in the M4’s favour, with significantly better integer, floating point and vector performance, as shown in the chart below.

The Y axis here gives loop throughput per second for my four basic in-core performance tests: a tight assembly code integer math loop, another tight assembly code loop of floating point math, NEON vector processor assembly code, and a tight loop calling an Accelerate routine run in the NEON unit. Pale blue bars are results for the M1, purple for the M3, and red for the shiny new M4.

Although improvements in integer and floating point performance might appear small here, these results are for single threads. When scaled up across more P cores, the differences are magnified, as shown in the regressions below.

This plots total times taken to execute multiple threads, each consisting of 10^9 (one thousand million, or one billion) loops of floating point assembly code, against the number of threads, which is the same as the number of cores used, as each core runs one thread. According to the fitted linear regression equations, each thread/core of 10^9 loops takes the same period: 6.04 seconds for the M3 P cores, and 4.69 for the M4. Those equate to 1.66 x 10^8 loops/second per thread for the M3, and 2.13 x 10^8 for the M4. On that basis, the M4 performs at 128% that of the M3, significantly better than expected from the 11% increase in maximum core frequency. That requires the M4 to process faster than the M3 at the same frequency, a frequency-independent improvement.
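As a rough check on that arithmetic, the sketch below converts the per-thread times from the regressions into throughput, then divides the speedup by the 111% frequency ratio to estimate how much of the gain is independent of frequency. The variable names are illustrative, and the per-clock figure is my own inference from the quoted numbers rather than a measured result.

```python
LOOPS_PER_THREAD = 10**9  # loops in each test thread

# Seconds per thread/core, from the fitted regressions quoted in the text.
SECONDS_PER_THREAD = {"M3": 6.04, "M4": 4.69}

# Ratio of maximum P core frequency, M4 to M3, as quoted in the text.
FREQ_RATIO_M4_TO_M3 = 1.11


def loops_per_second(seconds):
    """Throughput of one thread that completes LOOPS_PER_THREAD in `seconds`."""
    return LOOPS_PER_THREAD / seconds


throughput = {chip: loops_per_second(t) for chip, t in SECONDS_PER_THREAD.items()}

# Overall speedup of the M4 over the M3, per thread.
speedup = throughput["M4"] / throughput["M3"]

# What remains once the frequency increase is divided out.
per_clock_gain = speedup / FREQ_RATIO_M4_TO_M3

for chip, rate in throughput.items():
    print(f"{chip}: {rate:.3g} loops/second per thread")
print(f"M4 relative to M3: {speedup:.1%}")
print(f"Estimated frequency-independent gain: {per_clock_gain:.1%}")
```

Working from the unrounded times gives a speedup slightly higher than the one derived from the rounded throughputs, and suggests that very roughly half of the improvement isn't accounted for by frequency alone.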

Core allocation

One striking difference between M4 CPU cores and those of previous Apple silicon chips is their pattern of core allocation, something you may notice even in Activity Monitor, for all its faults. This is best illustrated in its CPU History window.

For these two series of tests, I waited until the Mac was idling quietly, with little happening on its CPU cores. I then ran, in rapid succession, a series of my in-core floating point tests of 10^9 loops at high QoS, starting with a single thread, then two, and so on up to six or eight threads. This results in a pattern distinct from anything you’ll see in Intel cores, as I have shown here since the early days of the M1.

This is the series seen on my MacBook Pro M3 Pro running macOS 15.2 beta, and is typical of M1, M2 and M3 chips. Although the cores are out of order here, these are the six cores in the chip’s single P cluster. At the left is the obvious peak from 1 thread running on Core 9, then the 2 threads of the next test appear on cores 9 and 12. Three fully occupy 7, 9 and 12, with a little spilt onto 8, and so on until all six are fully occupied with 6 threads.

Activity Monitor is a little too crude to see how distinct this is, for which you have to resort to shorter sampling periods of 0.1 second, and the finer detail of active residency reported by powermetrics. Those confirm that, much of the time, these heavy in-core compute loads run in a single thread on a single core, and if they are moved around, it’s relatively infrequently. E cores are different, though.
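For anyone wanting to try that shorter sampling period, here is a minimal sketch that only builds a powermetrics command line sampling the CPU every 100 ms. The helper name and its defaults are hypothetical; the command has to be run as root on a Mac, and no attempt is made here to parse its output.

```python
import shlex


def powermetrics_command(interval_ms=100, samples=50):
    """Build (but don't run) a powermetrics command for CPU sampling.

    interval_ms: sampling period in milliseconds (100 ms = 0.1 second).
    samples: number of samples to collect before powermetrics exits.
    """
    return [
        "sudo", "powermetrics",
        "--samplers", "cpu_power",  # CPU frequency and residency data
        "-i", str(interval_ms),     # sample interval in milliseconds
        "-n", str(samples),         # number of samples to take
    ]


command = powermetrics_command()
# Print a copy-and-paste form of the command for use in Terminal.
print(shlex.join(command))
```

Starting the command just before a test run, and stopping it just after, keeps the output to a manageable size.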

Compare that with a similar sequence, this time for 1-8 threads, on the M4 Pro. Its ten P cores are divided into two clusters, which I’ve separated here with the blue line, although within each cluster the cores aren’t in numeric order.

Reading again from the left, a single thread is run in cores 6 and 8, with a little in 14 in the second cluster. Two threads are run in all five cores of the second cluster, with a little at the end from those of the first cluster. Three threads look much the same, with significant contributions from all ten cores, and that pattern continues for higher numbers of threads.

So, in the M4 P cores, threads are no longer allocated to single cores, and it appears from the CPU History window that they may even be allocated across more than one cluster. In fact, the detailed active residency and frequency data from powermetrics show that, while threads are often moved between two cores in the same cluster, macOS will normally avoid unnecessarily running threads on other clusters, although it will happily move all threads from one cluster to another.

The rationale behind running all threads within the same cluster is clear, as CPU core frequencies of all cores in the same cluster are the same. Constraining threads to a single cluster thus allows others to idle and use less energy. While that continues in M4 chips, it isn’t clear why threads are moved between cores so frequently, or moved together to another cluster, and whether that brings improvements in performance.