Inside M4 chips: CPU power, energy and mystery

Few comparisons or benchmarks for M-series chips take into account the reason for equipping Apple silicon chips with more than one CPU core type, following Arm’s big.LITTLE architecture. Measuring single- or multi-core performance ignores the purpose of E cores, and estimates of overall power use don’t distinguish between the two core types. This article tries to estimate the cost in terms of power and energy of running identical tests on M4 P and E cores, and thereby provide insight into some of the most distinctive features of Apple silicon, and their benefits.

Methods

To run these two in-core performance tests I use a GUI app wrapped around a series of loading tests designed to enable the CPU core to execute their code as fast as possible, and with as few extraneous influences as possible. Both tests used here are written in assembly code, and aren’t intended to be purposeful in any way, nor to represent anything that real-world code might run. The two tests are:

64-bit floating point arithmetic, including an FMADD instruction to multiply and add, and FSUB, FDIV and FADD for subtraction, division and addition;

32-bit 4-lane dot-product vector arithmetic (NEON), including FMUL, two FADDP and a FADD instruction.

Source code of the loops is given in the Appendix.

The GUI app sets the number of loops to be performed, and the number of threads to be run. Each set of loops is then put into the same Grand Central Dispatch queue for execution, at the maximum Quality of Service of 33 (userInteractive). That ensures they are run preferentially on P cores, but spill over to E cores when no P core is available, which happens here when more than 10 threads are run concurrently. Timing of thread execution is performed using Mach Absolute Time, and the time taken to execute each thread is displayed at the end of the tests.

For these tests, the total number of loops to be executed in each thread was set at 5 x 10^8 for floating point, and 3.5 x 10^9 for NEON. Those values were chosen to take 2-3 seconds per thread, to ensure the whole test period was available for analysis.

Immediately before running each test, I launch powermetrics from the command line, to gather core power and performance data in sampling periods of 0.1 second for a total of 50 samples. Its output is piped into a text file, which is then analysed using Numbers and DataGraph. All tests were conducted on a Mac mini M4 Pro with 10 P and 4 E cores, running macOS 15.1.1 in standard power mode.
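As a sketch of how the saved text file might be reduced to a series of power readings, the following Python assumes that each sample contains a line of the form `CPU Power: 1234 mW`. That line format, the function name and the sample text are assumptions for illustration, and aren't the workflow used here, which relied on Numbers and DataGraph. The exact command isn't given above; something like `sudo powermetrics -i 100 -n 50 --samplers cpu_power` should match the sampling period and sample count described.

```python
import re

# Assumed line format, e.g. "CPU Power: 1234 mW"; check it against real output.
CPU_POWER_LINE = re.compile(r"^CPU Power:\s*(\d+)\s*mW", re.MULTILINE)

def cpu_power_samples(text):
    """Return total CPU power in mW for each sample found in the text."""
    return [int(value) for value in CPU_POWER_LINE.findall(text)]

# Made-up excerpt for illustration only, not measured data.
example = """\
*** Sampled system activity ***
CPU Power: 42 mW
GPU Power: 0 mW
*** Sampled system activity ***
CPU Power: 13050 mW
"""

print(cpu_power_samples(example))
```

Anchoring the pattern at the start of the line keeps it from matching other power lines, such as those for the GPU.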

Each test was inspected individually, and seen to contain the following phases:

small initial activity resulting from bringing the GUI app into focus, and clicking the Run button;

a brief period of low activity, typically with total CPU power at below 50 mW;

1-2 sample periods when threads are loaded onto the cores;

15-21 sample periods when threads are run, whose total CPU power measurements are collected for analysis;

1-2 sample periods when threads are unloaded;

a return to low activity, typically with total CPU power returning below 50 mW.

Means and standard deviations were then calculated for each series of power measurements, and pooled with times taken to execute threads.
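The phases above were identified by inspection, but the selection of readings for analysis could be approximated as follows. This is a minimal sketch: the function name, the `busy_mw` threshold and the `trim` of one reading at each end are hypothetical choices, and the readings are made up. It would need a different approach if the initial GUI activity exceeded the threshold.

```python
from statistics import mean, stdev

def run_phase(samples_mw, busy_mw, trim=1):
    """Return readings between the first and last one above busy_mw,
    dropping `trim` readings at each end to exclude loading and unloading."""
    busy = [i for i, s in enumerate(samples_mw) if s > busy_mw]
    if not busy:
        return []
    return samples_mw[busy[0] + trim : busy[-1] + 1 - trim]

# Made-up readings in mW, for illustration only.
samples = [30, 400, 25, 20, 6000, 13000, 13100, 12900, 13050, 7000, 30, 20]

run = run_phase(samples, busy_mw=1000)
print(run)                    # readings kept for analysis
print(mean(run), stdev(run))  # mean and sample standard deviation
```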

Power used by thread

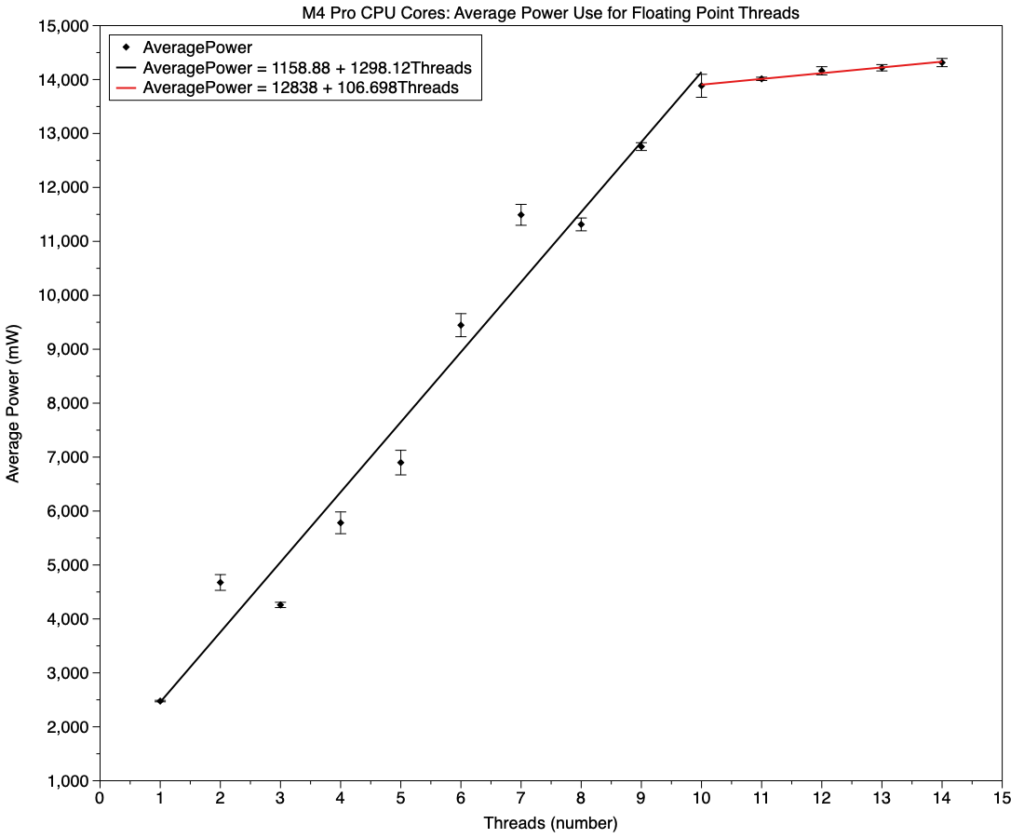

The first pair of graphs shows average power use for the number of threads run, shown here with error bars giving the range of ±1 standard deviation. These show two sections: for 1-10 threads, when all were running on P cores, and for 11-14 threads, when the 10 P cores were fully committed and 1-4 threads spilt over to run on E cores at their maximum frequency. Maximum power used during testing was just short of 34 W.

The graphs for the floating point test (above) and NEON (below) have regression lines fitted, indicating that:

Each additional floating point thread required 1,300 mW on P cores, and 110 mW on E cores.

Each additional NEON thread required 3,000 mW on P cores, and 280 mW on E cores.

P cores thus required 11-12 times the power of E cores, or E cores used 8-9% of the power of P cores.
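The ratios quoted can be checked from the regression slopes with a few lines of Python. The slopes are those given above; the function and dictionary names are only for illustration.

```python
# Regression slopes quoted above, in mW per additional thread.
SLOPES_MW = {
    "floating point": {"P": 1300, "E": 110},
    "NEON": {"P": 3000, "E": 280},
}

def power_ratios(p_mw, e_mw):
    """Return (P power as a multiple of E, E power as a percentage of P)."""
    return p_mw / e_mw, 100 * e_mw / p_mw

for test, s in SLOPES_MW.items():
    multiple, percent = power_ratios(s["P"], s["E"])
    print(f"{test}: P = {multiple:.1f} x E, E = {percent:.1f}% of P")
```

This gives multiples of about 11.8 and 10.7, and percentages of about 8.5% and 9.3%, in line with the rounded ranges above.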

Although linear regressions aren’t a bad fit, there’s consistent deviation from the linear relationship seen in previous analyses on M1 and M3 cores. More remarkably, the pattern of deviation is identical between these two tests, although they run in different units in these cores. In both cases, power use was high for 2 and 7 threads, while that for 3 and 8 threads was slightly lower. The only unusual pattern seen in powermetrics output was that, when running 2 and 7 threads, thread mobility was much higher than in other tests.

Previous tests on M1 and M3 P cores found that each additional floating point thread run on those requires about 935 mW, indicating a substantial increase in power used by M4 P cores when running at their higher maximum frequency. E cores in an M1 Pro require about 100 mW each when running at maximum frequency, similar to those in the M4.

Execution time

As power is the rate of energy use over time, the next step is to examine total execution time for all the threads running concurrently, which should form a linear relationship with different gradients for P and E cores. The next two graphs demonstrate that.

For both floating point (above) and NEON (below), there’s a tight linear relationship between total execution time and numbers of threads. Floating point demonstrates that each thread costs 2.4 seconds on P cores and 3.6 seconds on E cores, making E core execution time 150% that of P cores. NEON is similar, at 2.5 seconds on P cores and 3.4 seconds on E cores, for a ratio of 136%.
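Those gradients imply a simple two-part linear model of total execution time, sketched below. It assumes that the first 10 threads run on P cores and the rest on E cores, and that the fitted lines have no intercept; both are simplifications, and the function name is hypothetical.

```python
def total_time(threads, p_cost, e_cost, p_cores=10):
    """Estimate total execution time in seconds, summed over all threads."""
    on_p = min(threads, p_cores)        # threads running on P cores
    on_e = max(threads - p_cores, 0)    # threads spilt over to E cores
    return on_p * p_cost + on_e * e_cost

# Per-thread costs in seconds, as quoted above.
print(total_time(10, 2.4, 3.6))   # floating point, P cores only
print(total_time(14, 2.4, 3.6))   # floating point, 10 P + 4 E
print(total_time(14, 2.5, 3.4))   # NEON, 10 P + 4 E

# E core time as a percentage of P core time.
print(round(100 * 3.6 / 2.4), round(100 * 3.4 / 2.5))
```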

Time taken for the slowest thread to complete execution shows interesting finer detail.

For both tests, performance falls into several sections according to the number of threads run. With fewer than 5 threads run, there’s a sharp rise in time taken per thread. From 5-10 threads, time required remains constant, before increasing from 10-14 threads, when additional threads are spilt over onto E cores.

This has implications for anyone trying to measure core performance, as it demonstrates that a single thread can run disproportionately fast, compared with 3-10 threads. Basing any conclusion or comparison on a single thread completing in little more than 2 seconds, when 5 concurrent threads would take 2.34 seconds, 117% of the single thread, could be misleading.

Energy use

Although power use determines heat production, and so is an important factor in determining cooling requirements, total energy required to execute threads is equally important for Macs running from battery. Simply reducing core frequency will reduce power used, but by extending the time taken to complete tasks, it may have no effect on energy used, or on battery endurance. My final two graphs therefore show estimated total energy used when running test threads on P and E cores, the ultimate test of any big.LITTLE CPU design such as that in the M4.

Graphs for floating point (above) and NEON (below) are inevitably similar in form to those for power, with one near-linear section from 1-10 threads, when the threads are run only on P cores, and another from 11-14 threads, when they also spill over to E cores.

Fitted regression lines provide the energy cost for each additional thread:

For floating point, each thread run on a P core costs 3.1 J, and for an E core 1.5 J, making the energy used by an E core 47% that of a P core.

For NEON, P cores cost 7.7 J per thread, and E cores 3.0 J, making the energy used by an E core 38% that of a P core.
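As energy is power multiplied by time, the P core figures can be roughly cross-checked against the power and time gradients given earlier. This is only a consistency check under the assumption that a P core thread draws its fitted power for its whole fitted execution time; the energy costs above come from the fitted regression lines, so only approximate agreement is expected. It is applied to P cores only, and the names used are hypothetical.

```python
def energy_j(power_mw, seconds):
    """Energy in joules from power in milliwatts and time in seconds."""
    return power_mw / 1000 * seconds

# (power per thread in mW, time per thread in s, fitted energy in J), from the text.
P_CORE = {
    "floating point": (1300, 2.4, 3.1),
    "NEON": (3000, 2.5, 7.7),
}

for test, (mw, secs, fitted) in P_CORE.items():
    print(f"{test}: {energy_j(mw, secs):.2f} J estimated, {fitted} J fitted")
```

The products come to about 3.1 J and 7.5 J, close to the fitted values of 3.1 J and 7.7 J.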

It’s important to remember that the E cores here aren’t being run at frequencies for high efficiency, but at their maximum so they can substitute for the P cores that are already in use.

Considering the small deviations from those linear relationships, it appears that running 2, 6 or 7 threads on P cores requires slightly more energy than predicted from the regression lines shown.

Unfortunately, assessing the energy used by E cores running at low frequencies, as they normally do when performing background tasks, is fraught with inaccuracies due to their low power use. My previous estimate for floating point tests is that a slow-running E core uses less than 45 mW per thread, and for the same task requires about 7% of the energy used by a P core running at maximum frequency, but I have lower confidence in the accuracy of those figures than in those above for higher frequencies.

Key information

When running the same code at maximum frequency, E cores used 8-9% of the power of P cores.

Power use when running 2 or 7 threads was anomalously high, possibly due to high thread mobility.

Execution on E cores was significantly slower than on P cores, at 136-150% of the time required on P cores.

Single-core performance measurements may not be accurate reflections of performance on multiple cores.

When running the same code at maximum frequency, energy used by an E core is expected to be 38-47% that of a P core.

Previous articles

Inside M4 chips: P cores

Inside M4 chips: P cores hosting a VM

Inside M4 chips: E and P cores

Inside M4 chips: CPU core performance

Appendix: Source code

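    _fpfmadd:
        STR LR, [SP, #-16]!        // save the link register on the stack
        MOV X4, X0                 // X4 = loop count passed in X0
        ADD X4, X4, #1             // add 1, as the loop decrements before testing
        FMOV D4, D0                // copy the three double arguments
        FMOV D5, D1
        FMOV D6, D2
        LDR D7, INC_DOUBLE         // load the increment constant (not shown here)
    fp_while_loop:
        SUBS X4, X4, #1            // decrement the counter and set flags
        B.EQ fp_while_done         // leave the loop when it reaches zero
        FMADD D0, D4, D5, D6       // D0 = (D4 * D5) + D6
        FSUB D0, D0, D6            // D0 = D0 - D6
        FDIV D4, D0, D5            // D4 = D0 / D5
        FADD D4, D4, D7            // D4 = D4 + D7
        B fp_while_loop
    fp_while_done:
        FMOV D0, D4                // return the result in D0
        LDR LR, [SP], #16          // restore the link register
        RET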

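    _neondotprod:
        STR LR, [SP, #-16]!        // save the link register on the stack
        LDP Q2, Q3, [X0]           // load two 4-lane vectors from the address in X0
        FADD V4.4S, V2.4S, V2.4S   // V4 = V2 + V2, added to V2 on each loop
        MOV X4, X1                 // X4 = loop count passed in X1
        ADD X4, X4, #1             // add 1, as the loop decrements before testing
    dp_while_loop:
        SUBS X4, X4, #1            // decrement the counter and set flags
        B.EQ dp_while_done         // leave the loop when it reaches zero
        FMUL V1.4S, V2.4S, V3.4S   // lane-wise products of V2 and V3
        FADDP V0.4S, V1.4S, V1.4S  // pairwise add of adjacent lanes
        FADDP V0.4S, V0.4S, V0.4S  // second pairwise add gives the sum of all four products
        FADD V2.4S, V2.4S, V4.4S   // V2 = V2 + V4
        B dp_while_loop
    dp_while_done:
        FMOV S0, S2                // return the lowest lane of V2 in S0
        LDR LR, [SP], #16          // restore the link register
        RET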