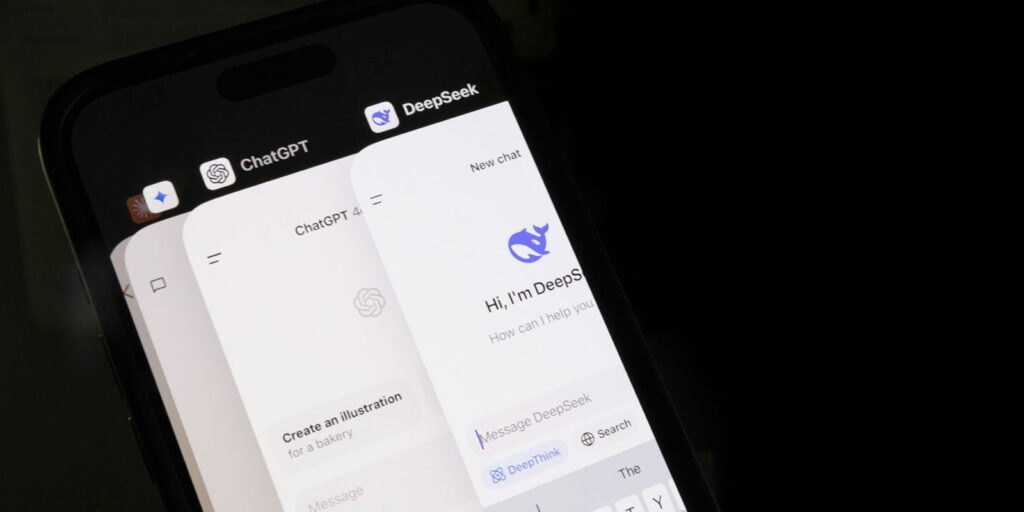

DeepSeek will help you make a bomb and hack government databases

Tests by security researchers revealed that DeepSeek failed every safeguard requirement for a generative AI system and was fooled by even the most basic jailbreak techniques.

This means it can be trivially tricked into answering queries that should be blocked, from bomb recipes to guidance on hacking government databases …