Google’s new Gemma 3 AI model is optimized to run on a single GPU

Most new AI models go big—more parameters, more tokens, more everything. Google’s newest AI model has some big numbers, but it’s also tuned for efficiency. Google says the Gemma 3 open source model is the best in the world for running on a single GPU or AI accelerator. The latest Gemma model is aimed primarily at developers who need to create AI to run in various environments, be it a data center or a smartphone. And you can tinker with Gemma 3 right now.

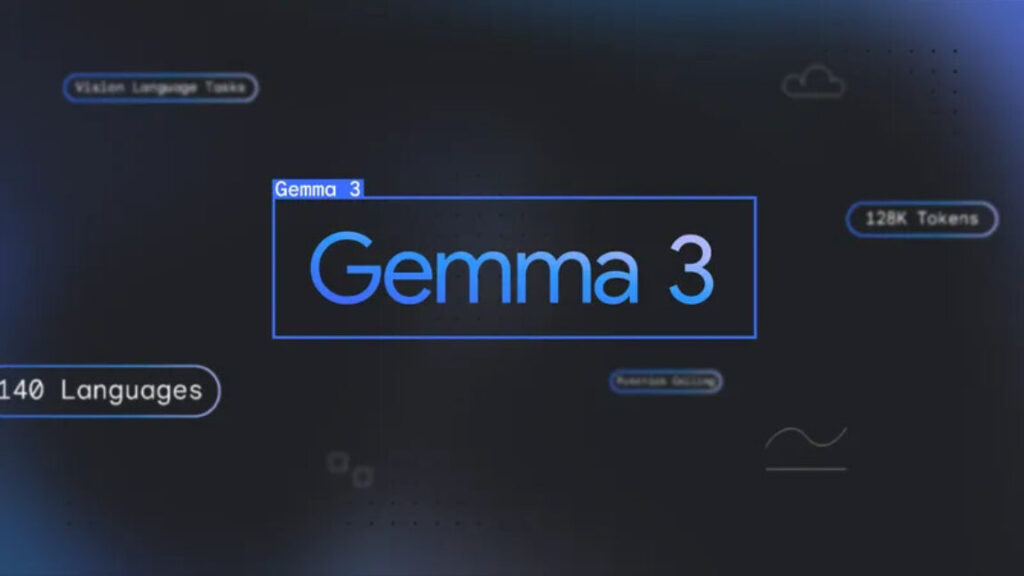

Google claims Gemma 3 will be able to tackle more challenging tasks than the older open source Google models. The context window, a measure of how much data you can input, has been expanded to 128,000 tokens from 8,192 in previous Gemma models. Gemma 3, which is based on the proprietary Gemini 2.0 foundation, is also a multimodal model capable of processing text, high-resolution images, and even video. Google also has a new solution for image safety called ShieldGemma 2, which can be integrated with Gemma to help block unwanted images in three content categories: dangerous, sexual, or violent.
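To give a rough sense of what a 128,000-token window means in practice, here is a minimal Python sketch that estimates whether a piece of text would fit. It is an illustration only: the four-characters-per-token ratio is a common rule of thumb for English text, not Gemma's actual tokenizer, and the function names and the output reserve are hypothetical choices made for this example.

```python
import math

# Context window size quoted for Gemma 3 in this article.
GEMMA3_CONTEXT_TOKENS = 128_000


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Roughly estimate a token count from character length.

    Assumption: about 4 characters per token, a common heuristic for
    English text. A real count requires the model's own tokenizer.
    """
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(
    text: str,
    context_tokens: int = GEMMA3_CONTEXT_TOKENS,
    reserved_for_output: int = 1_000,
) -> bool:
    """Check whether the estimated prompt size, plus room reserved for
    the model's reply (a hypothetical budget), fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= context_tokens


if __name__ == "__main__":
    document = "word " * 50_000  # about 250,000 characters
    print(estimate_tokens(document), fits_in_context(document))
```

Under that heuristic, 128,000 tokens works out to roughly half a million characters of English text, though the real figure depends on the tokenizer and the content.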

Most of the popular AI models you’ve heard of run on collections of servers in a data center, filled to the brim with AI computing power. Many of them are far too large to run on the kind of hardware you have at home or in the office. The release of the first Gemma models last year gave developers and enthusiasts another option with modest hardware requirements to compete with the likes of Meta’s Llama 3. There has been a drive for efficiency in AI lately, with models like DeepSeek R1 gaining traction on the basis of lower computing costs.