Making Apple silicon faster: 1 Threads and tasks

Over the last couple of weeks, I have mentioned briefly how macOS Sequoia brings a new version of the Swift programming language, and how one of its major advances is support for strict concurrency. This series of articles looks in more detail at how this fits in with other features to get work done faster on Apple silicon Macs, and sets Swift 6 in the context of their multicore performance.

CPU cores

All Macs introduced since 2006 have shipped with at least two CPU cores, and Apple silicon chips come with a minimum of seven cores. The days of a single CPU with one core are long since past, and all apps of any substance should now be coded to get the best performance from multiple cores.

Apple silicon chips are distinctive in having two different CPU core types, those designed for performance (P), and others for efficiency (E). Although the number and ratio of core types vary between different members of M-series families, some if not many apps could benefit from performing some of their work preferentially on one core type or the other. Apps that perform time-consuming work, such as compressing or decompressing large files, are good examples: giving the user the option of running that work on P or E cores is beneficial.
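macOS offers apps no public way to pin work to a particular core type; the lever they do have is QoS. The sketch below shows one way an app might turn a user's choice into a QoS for a long job. `CorePreference`, `qualityOfService(for:)` and `makeJobQueue(preference:)` are hypothetical names, and the mapping rests on an assumption, not a documented guarantee: that macOS runs background-QoS threads on E cores while preferring P cores for higher QoS.

```swift
import Foundation

/// Hypothetical user-facing choice for a long job such as compression.
enum CorePreference {
    case fastest    // hope to run on performance (P) cores
    case efficient  // hope to run on efficiency (E) cores
}

/// Maps the user's choice to a QoS. Apps can't pick core types directly;
/// this assumes macOS favours P cores for userInitiated work and keeps
/// background work on E cores.
func qualityOfService(for preference: CorePreference) -> QualityOfService {
    switch preference {
    case .fastest:
        return .userInitiated
    case .efficient:
        return .background
    }
}

/// Builds an operation queue for the job, using the chosen QoS.
func makeJobQueue(preference: CorePreference) -> OperationQueue {
    let queue = OperationQueue()
    queue.qualityOfService = qualityOfService(for: preference)
    return queue
}
```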

Multitasking

In addition to running code simultaneously on multiple cores, each CPU core can run multiple tasks, although not all at the same time. This has traditionally been known as multitasking, and can be used to maximise the work performed by each core. The most obvious examples are with input/output such as network access. If an app needs to obtain data from a remote source, that can take several seconds. If the code waiting for that data blocks the core that it’s running on, forcing it to remain idle until the data is returned, then that core doesn’t perform any useful work for that period.

There are two ways that multitasking can keep the core working while that code awaits its data. In preemptive multitasking, the waiting code is automatically set aside and other code is run until the waiting code is ready to work again. In cooperative multitasking, the waiting code itself yields for that period, allowing other code that can work to proceed. The latter is one of the situations at the heart of what has become known as concurrency, although the code in question isn’t actually run at the same time, as it would be on separate cores.
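To make the cooperative case concrete, here is a toy model in Swift; it is not how macOS or Swift’s runtime actually schedules work. Each hypothetical task is a closure that does one slice of work and then returns, handing the single simulated core back to `runCooperatively`, which runs the tasks round-robin until all have finished. A task waiting on the network simply uses its turns to yield, so the compute task keeps the core busy in the meantime.

```swift
/// One slice of a task's work. It must return after each slice, handing
/// control back to the scheduler; it returns true once the task is finished.
typealias Step = () -> Bool

/// Hypothetical helper: a task that needs `slices` turns to finish.
func makeTask(slices: Int) -> Step {
    var turns = 0
    return {
        turns += 1
        return turns >= slices
    }
}

/// Runs tasks round-robin on one simulated core until all have finished,
/// recording which task held the core at each turn. Nothing forces a task
/// off the core: each gives it up by returning, which is what makes this
/// cooperative rather than preemptive.
func runCooperatively(_ tasks: [(name: String, step: Step)]) -> [String] {
    var trace: [String] = []
    var pending = tasks
    while !pending.isEmpty {
        var stillPending: [(name: String, step: Step)] = []
        for task in pending {
            trace.append(task.name)
            if !task.step() {
                stillPending.append(task)
            }
        }
        pending = stillPending
    }
    return trace
}

let trace = runCooperatively([
    (name: "network", step: makeTask(slices: 3)),  // 2 turns yielding while awaiting data, 1 processing
    (name: "compute", step: makeTask(slices: 2)),
])
print(trace)
```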

Terminology

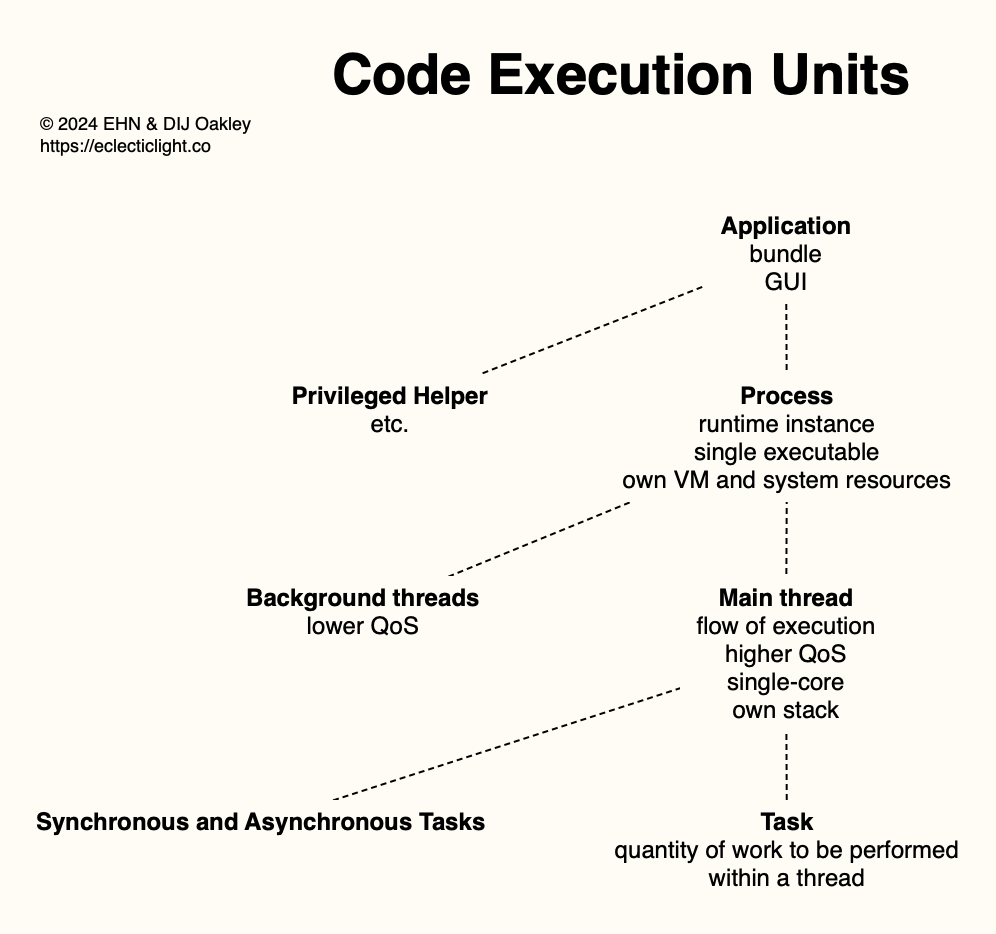

Many different mechanisms have been devised to make best use of multiple cores and multitasking, and most have developed their own terms, causing confusion. For the purposes of these articles I try to comply with Apple’s long-established terms, as shown in the diagram below.

In most cases, we’re considering applications with a GUI, normally run from a bundle structure. These can in turn run their own code, such as privileged helper apps that perform work requiring elevated privileges. In recent years, there has been a proliferation of additional executable code associated with many apps.

When that app is run, a single runtime instance is created from its single executable. This is given its own virtual memory and access to the system resources that it needs. That instance is a process, and is listed as an entity in Activity Monitor, for example.

Each process has a main thread, a single flow of code execution, and may create additional threads, perhaps to run in the background. Threads don’t get their own virtual memory, but share the memory allocated to their process. Each does, though, have its own stack. On Apple silicon Macs threads are easy to tell apart, as each can only run on a single core at any moment, although it may be moved between cores, sometimes rapidly. In macOS, threads are assigned a Quality of Service (QoS) that determines how and where macOS runs them. Normally the process’s main thread is assigned a high QoS, and background threads that it creates may be given the same or a lower QoS, as determined by the developer.
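A minimal Foundation sketch of threads sharing their process’s memory: each thread counts in a local variable of its own, then adds its result to a `SharedTotal` object that every thread can see, guarded by a lock. `SharedTotal` and `countWithThreads` are illustrative names, and the utility QoS is only an example of giving background threads a lower QoS.

```swift
import Dispatch
import Foundation

/// A total kept in the process's memory, which every thread can see.
/// The lock stops two threads updating it at the same time.
final class SharedTotal: @unchecked Sendable {
    private let lock = NSLock()
    private var value = 0

    func add(_ amount: Int) {
        lock.lock(); defer { lock.unlock() }
        value += amount
    }

    var current: Int {
        lock.lock(); defer { lock.unlock() }
        return value
    }
}

/// Starts `threadCount` threads that each count to `perThread` in a local
/// variable, then add their result to the shared total.
func countWithThreads(threadCount: Int, perThread: Int) -> Int {
    let shared = SharedTotal()
    let finished = DispatchGroup()
    for _ in 0..<threadCount {
        finished.enter()
        let thread = Thread {
            var local = 0                   // private to this thread
            for _ in 0..<perThread { local += 1 }
            shared.add(local)               // shared process memory
            finished.leave()
        }
        thread.qualityOfService = .utility  // example lower QoS for background work
        thread.start()
    }
    finished.wait()                         // block until every thread is done
    return shared.current
}
```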

Within each thread are individual tasks, each a quantity of work to be performed. These can be brief sections of code and are more interdependent than threads. They’re often divided into synchronous and asynchronous tasks, depending on whether they need to be run as part of a strict sequence. Because they’re all running within a common thread, they don’t have individual QoS although they can have priorities. Relationships between tasks can readily become complex, and can end up blocking a core and impairing performance.
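Dispatch uses the same vocabulary for the units of work submitted to its queues. In this sketch, assuming a serial queue with a hypothetical label, two tasks are submitted asynchronously and a third synchronously. The synchronous call blocks the caller until its task has run, which on a serial queue means after the two already waiting ahead of it.

```swift
import Dispatch

/// Records the order in which tasks ran. It is only touched from the
/// serial queue, or after the queue has drained, so it needs no lock.
final class RunLog: @unchecked Sendable {
    var entries: [String] = []
}

/// Submits three tasks to one serial queue, two asynchronously and then
/// one synchronously, and returns the order in which they ran.
func runTasks() -> [String] {
    let queue = DispatchQueue(label: "com.example.tasks")  // hypothetical label; serial by default
    let log = RunLog()
    // async returns at once; each task runs later, in submission order.
    queue.async { log.entries.append("async 1") }
    queue.async { log.entries.append("async 2") }
    // sync blocks the caller until its task has run, after those queued ahead.
    queue.sync { log.entries.append("sync 3") }
    return log.entries
}
```

Calling `runTasks()` should return `["async 1", "async 2", "sync 3"]` every time, because a serial queue runs its tasks strictly in the order they were submitted.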

To confuse matters, you might come across a Mach task which sits in between a process and its threads, so is very different from the more usual meaning of the word task. To avoid any confusion, I won’t refer to that again in these articles.

Dispatch and QoS

There are multiple ways of creating and managing threads in macOS, some of them ancient and now little-used. One more modern and popular method uses what was originally branded Grand Central Dispatch (GCD), and is now referred to simply as Dispatch. The following code uses Foundation’s OperationQueue, which is built on top of Dispatch, to create a single queue whose operations are run on threads, setting the maximum number of operations it runs concurrently, and their QoS.

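```swift
import Foundation

// numProcs isn't defined in this excerpt; as an assumption, use the
// number of CPU cores currently available to the process.
let numProcs = ProcessInfo.processInfo.activeProcessorCount

// Create a single operation queue (OperationQueue runs on top of Dispatch).
let processingQueue: OperationQueue = {
    let result = OperationQueue()
    return result
}()

// Cap how many operations, and so threads, the queue runs at once.
processingQueue.maxConcurrentOperationCount = numProcs
// Set the QoS that macOS uses when scheduling the queue's threads.
processingQueue.qualityOfService = QualityOfService.userInteractive

processingQueue.addOperation {
    // run code
}
```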

macOS then manages the threads in that queue, allocating each to a CPU core according to the QoS, the availability of cores, and the QoS of other threads.
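For comparison, the same idea can be expressed with Dispatch directly, rather than through OperationQueue. This sketch assumes a hypothetical job that sums a range of integers in chunks, each submitted as a work item to a concurrent queue created with userInteractive QoS; a `DispatchGroup` lets the caller wait until every chunk has finished. `Accumulator`, `parallelSum` and the queue label are illustrative names.

```swift
import Dispatch
import Foundation

/// Collects partial results from concurrent work items; the lock stops
/// two of them updating the total at once.
final class Accumulator: @unchecked Sendable {
    private let lock = NSLock()
    private var total = 0

    func add(_ amount: Int) {
        lock.lock(); defer { lock.unlock() }
        total += amount
    }

    var value: Int {
        lock.lock(); defer { lock.unlock() }
        return total
    }
}

/// Hypothetical example job: sums 0..<n by splitting the range into
/// `chunks` work items on a concurrent queue with userInteractive QoS.
func parallelSum(upTo n: Int, chunks: Int) -> Int {
    precondition(chunks > 0, "need at least one chunk")
    let queue = DispatchQueue(label: "com.example.processing",  // hypothetical label
                              qos: .userInteractive,
                              attributes: .concurrent)
    let group = DispatchGroup()
    let accumulator = Accumulator()
    let chunkSize = (n + chunks - 1) / chunks  // round up so no values are missed
    for chunk in 0..<chunks {
        let start = chunk * chunkSize
        let end = min(start + chunkSize, n)
        guard start < end else { continue }    // skip empty trailing chunks
        queue.async(group: group) {
            accumulator.add((start..<end).reduce(0, +))
        }
    }
    group.wait()  // block until every work item has finished
    return accumulator.value
}
```

One design difference: a concurrent DispatchQueue has no equivalent of maxConcurrentOperationCount, so Dispatch itself decides how many threads to use; capping concurrency would need something extra, such as a DispatchSemaphore.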

QoS is normally chosen from the macOS standard list:

QoS 9 (binary 001001), named background and intended for threads performing maintenance, which don’t need to be run with any higher priority.

QoS 17 (binary 010001), utility, for tasks the user doesn’t track actively.

QoS 25 (binary 011001), userInitiated, for tasks that the user needs to complete to be able to use the app.

QoS 33 (binary 100001), userInteractive, for user-interactive tasks, such as handling events and the app’s interface.

There’s also a ‘default’ QoS of 21 (binary 010101), between utility and userInitiated, an unspecified value of 0, and in some circumstances you might come across others.
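The binary forms in the list above can be reproduced with a short sketch. `QoSLevel` is a hypothetical enum mirroring the four standard classes with the same numeric values; it also maps each to Foundation’s `QualityOfService`, the type used with OperationQueue in the earlier code.

```swift
import Foundation

/// Hypothetical mirror of the four standard QoS classes, using the same
/// numeric values as the list above.
enum QoSLevel: Int, CaseIterable {
    case background = 9
    case utility = 17
    case userInitiated = 25
    case userInteractive = 33

    /// The value as six binary digits, e.g. 9 becomes "001001".
    var binary: String {
        let digits = String(rawValue, radix: 2)
        return String(repeating: "0", count: 6 - digits.count) + digits
    }

    /// The matching Foundation QoS, as used with OperationQueue.
    var foundationQoS: QualityOfService {
        switch self {
        case .background: return .background
        case .utility: return .utility
        case .userInitiated: return .userInitiated
        case .userInteractive: return .userInteractive
        }
    }
}

for level in QoSLevel.allCases {
    print(level, level.rawValue, level.binary)
}
```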

Managing a queue of threads for a single core type is relatively straightforward, but allocating threads to different core types requires a set of rules. Intel uses its Thread Director to provide hints to the core scheduler in Windows, a system that many have found opaque and frustrating. macOS appears simpler, with allocation more under the control of the QoS set by the process when it creates a thread, as I’ll demonstrate in the next article.

Key terms

A process is a single runtime instance of an app, with its own virtual memory and system resources.

Each process has a main thread, a single flow of code execution run on one core at a time, sharing virtual memory allocated to the process, but with its own stack.

Processes can create additional threads as they require, which can be scheduled to run in parallel on different cores.

Within each thread are tasks, quantities of work to be performed. These can be synchronous or asynchronous, and can have complex relationships.

Threads are assigned a Quality of Service (QoS) to determine how they are managed in queues, and which type of cores they are allocated to.

Further reading

Dispatch (Apple)

Threading (Apple)

Concurrency (Apple)

Concurrency (Swift 6)