Copy speeds of large and sparse files

I have recently seen reports of very low speeds when copying large files such as virtual machines, in some cases taking more than a day. That was even when the files should have been sparse, and so should have taken less time than their full size would suggest. This article sets out some tests and checks that you can use to investigate such unexpectedly poor performance.

Expected performance

Time taken to copy or duplicate files varies greatly in APFS. Copies and duplicates made within the same volume should, when performed correctly, be cloned, so should happen in the twinkling of an eye, and without any penalty for size. This applies regardless of whether the original is a sparse file, or a reasonably sized bundle or folder, whose contents should normally be cloned too. If cloning doesn’t occur, then the method used to copy or duplicate should be suspected. Apple explains how this is accomplished with the copyItem() method of FileManager in the Foundation API. This is also the expected behaviour for the Finder’s Duplicate command.
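For a test from a script rather than an app, the following is a minimal sketch in Python. It doesn't use FileManager; instead it assumes that the lower-level clonefile() call in the macOS system library is available, and falls back to an ordinary copy when it isn't or when the call fails. The function name clone_or_copy is my own, for illustration only.

```python
import ctypes
import os
import shutil
import sys


def clone_or_copy(src: str, dst: str) -> str:
    """Try to clone src to dst; fall back to an ordinary copy.

    Returns "clone" or "copy" to show which path was taken.
    """
    if sys.platform == "darwin":
        # Load the symbols already linked into the running process.
        libc = ctypes.CDLL(None, use_errno=True)
        if hasattr(libc, "clonefile"):
            # int clonefile(const char *src, const char *dst, uint32_t flags)
            libc.clonefile.argtypes = [
                ctypes.c_char_p,
                ctypes.c_char_p,
                ctypes.c_uint32,
            ]
            libc.clonefile.restype = ctypes.c_int
            if libc.clonefile(os.fsencode(src), os.fsencode(dst), 0) == 0:
                return "clone"
            # A non-zero result commonly means that dst already exists,
            # that src and dst are on different volumes, or that the
            # file system doesn't support cloning.
    # Ordinary copy: time taken depends on the amount of data copied.
    shutil.copy2(src, dst)
    return "copy"
```

If this returns "copy" for a source and destination that you believe are in the same APFS volume, that is worth investigating before blaming the disk.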

Copying a file to a different volume, whether it’s in the same container, or even on a different disk, should proceed as expected, according to the full size of the file, unless the original is a sparse file and both source and destination use the APFS file system. When an appropriate method is used to perform the copy between APFS volumes, sparse file format should be preserved. This results in distinctive behaviour in the Finder: at first, its progress dialog reflects the full (non-sparse) size of the file to be copied, and the bar proceeds at the speed expected for that size. When the bar reaches a point equivalent to the actual (sparse) size of the file being copied, it suddenly shoots to 100% completion.

Copying a sparse file to a file system other than APFS will always result in it expanding to its full, non-sparse size, and the whole of that size will then be transferred during copying. There is no option to transfer it in sparse form and expand it to full size on the destination, nor to convert its format on the fly.
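A quick way to check whether a file is still sparse before and after copying is to compare its logical size with the storage actually allocated to it. This is a rough sketch in Python, assuming a POSIX system where st_blocks is reported in 512-byte units. The function names are hypothetical, and the result is only a hint, as file system compression can also make allocated size smaller than logical size.

```python
import os


def size_report(path: str) -> dict:
    """Compare a file's logical size with the storage allocated to it."""
    st = os.stat(path)
    logical = st.st_size
    # st_blocks counts 512-byte units on POSIX systems (assumption).
    allocated = st.st_blocks * 512
    return {
        "logical": logical,
        "allocated": allocated,
        # Only a hint: compressed files can also allocate less than
        # their logical size.
        "looks_sparse": allocated < logical,
    }


def make_test_file(path: str, size: int) -> None:
    """Create a file of the given logical size without writing any data.

    On file systems that support sparse files this normally produces
    a sparse file, but that isn't guaranteed.
    """
    with open(path, "wb") as f:
        f.truncate(size)
```

Running size_report() on the original and on its copy shows whether the copy has expanded: if the copy's allocated size is close to its logical size while the original's was much smaller, sparse format wasn't preserved.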

External SSDs

When copying very large files, external disk performance can depart substantially from that measured using relatively small transfer sizes. While some SSDs will achieve close to their benchmark write speed, others will slow greatly. Factors that can cause this include:

a full SLC cache,

failure to Trim,

small write caches/buffers in the SSD,

thermal throttling.
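To see whether a particular SSD slows during a long write, you can time a series of large sequential writes and look for a fall in speed as they progress. The following is only a rough sketch in Python, not a substitute for a proper benchmark. The function name and default sizes are my own assumptions, and os.fsync() may not force data all the way to the storage medium on every system.

```python
import os
import time


def timed_chunk_writes(path: str, chunk_mib: int = 64, chunks: int = 16) -> list:
    """Write `chunks` blocks of `chunk_mib` MiB each to `path`.

    Returns the write speed of each block in MB/s, in order.
    """
    # Random data, so the same incompressible block is written each time.
    block = os.urandom(chunk_mib * 1024 * 1024)
    speeds = []
    with open(path, "wb") as f:
        for _ in range(chunks):
            start = time.perf_counter()
            f.write(block)
            f.flush()
            # Ask the system to push this block out before timing stops.
            os.fsync(f.fileno())
            elapsed = max(time.perf_counter() - start, 1e-9)
            speeds.append(len(block) / elapsed / 1e6)
    return speeds
```

To be useful, the total written needs to be large enough to exceed any cache in the SSD, so expect to raise chunks well above the default, and remember to delete the test file afterwards. A series of speeds that starts high and then drops sharply and stays low is consistent with the factors listed above, although it can't tell you which of them is responsible.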

Many SSDs are designed to use fast single-level cell (SLC) write caching to deliver impressive benchmarks and perform well in everyday use. When very large files are written to them, they can exceed the capacity of the SLC cache, and write speed then collapses to less than a quarter of that seen in their benchmark performance. The only solution is to use a different SSD with a larger SLC cache.
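The effect on total copy time can be estimated with some simple arithmetic. This sketch in Python assumes a deliberately crude model, in which the SSD writes at its benchmark speed until the SLC cache is full, then at a fixed lower speed for the remainder, with no recovery of the cache during the copy. The figures used in the example are hypothetical, and not those of any real SSD.

```python
def estimated_write_seconds(
    file_gb: float, cache_gb: float, fast_gb_per_s: float, slow_gb_per_s: float
) -> float:
    """Estimate time to write a file under a two-speed SLC cache model."""
    # Part of the file that fits within the SLC cache.
    cached = min(file_gb, cache_gb)
    # Part written after the cache is full, at the lower speed.
    remainder = file_gb - cached
    return cached / fast_gb_per_s + remainder / slow_gb_per_s


if __name__ == "__main__":
    # Hypothetical SSD: 50 GB cache, 1.0 GB/s in cache, 0.2 GB/s after.
    naive = 500 / 1.0
    modelled = estimated_write_seconds(500, 50, 1.0, 0.2)
    print(f"benchmark-based estimate: {naive:.0f} s")
    print(f"two-speed estimate: {modelled:.0f} s")
```

With those figures, a 500 GB file that the benchmark speed suggests should take a little over 8 minutes is estimated to take more than 38 minutes, as 450 GB of it is written at the lower speed.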

Trimming is also an insidious problem. By default, macOS Trims HFS+ and APFS volumes when they’re mounted only if the disk they’re stored on has an NVMe interface, and won’t Trim volumes on SSDs with a SATA interface. The trimforce command may be able to force Trimming on SATA disks, although that isn’t clear, and its man page is forbidding.

Trimming ensures that storage blocks no longer required by the file system are reported to the SSD firmware so they can be marked as unused, erased and returned for use. If Trimming isn’t performed by APFS at the time of mounting, those storage blocks are normally reclaimed during housekeeping performed by the SSD firmware, but that may be delayed or unreliable. If those blocks aren’t released, write speed will fall noticeably, and in the worst case blocks will need to be erased during writing.

For best performance, SATA SSDs should be avoided, and NVMe used instead. NVMe is standard for USB 3.2 Gen 2 10 Gb/s, USB4 and Thunderbolt SSDs, which should all Trim correctly by default.

Disk images

Since Monterey, disk images with internal APFS (or HFS+) file systems have benefitted from an ingenious combination of Trimming and sparse file format when stored on APFS volumes. This can result in great savings in disk space used by disk images, provided that they’re handled as sparse files throughout.

When a standard read-write (UDIF) disk image is first created, it occupies its full size in storage. When that disk image is mounted, APFS performs its usual Trim, which in the case of a disk image gathers all free space into contiguous storage. The disk image is then written out to storage in sparse file format, which normally requires far less than its full non-sparse size.

This behaviour can save GB of disk space in virtual machines, but like other sparse files, is dependent on the file remaining on APFS file systems, otherwise it will explode to its full non-sparse size. Any app that attempts to copy the disk image will also need to use the correct calls to preserve its format and avoid explosion.

Tools

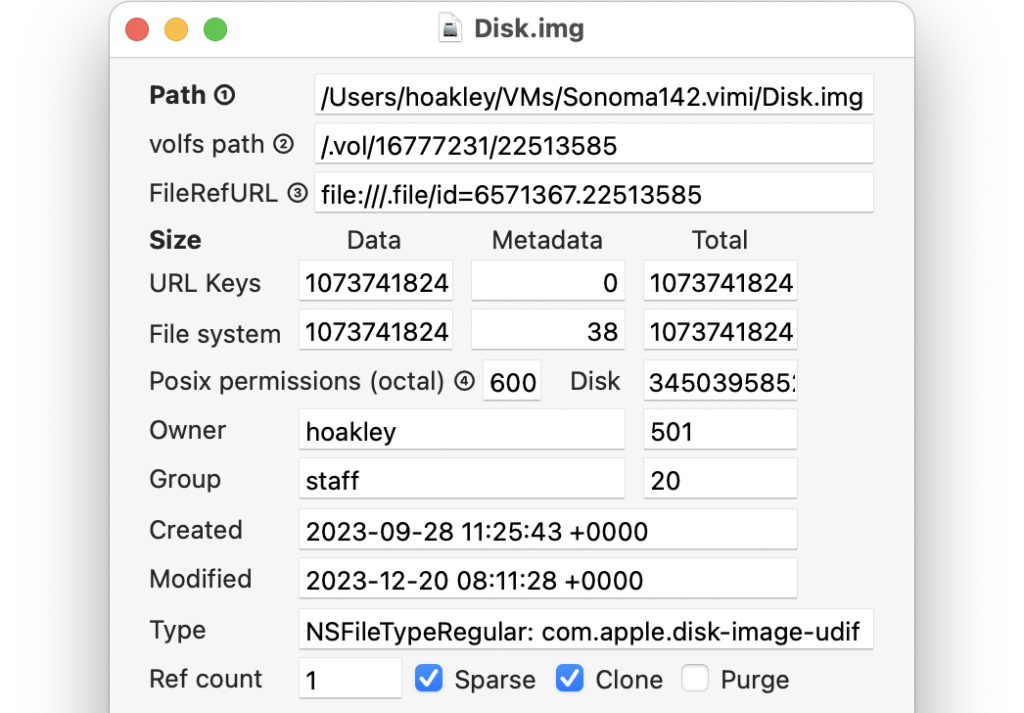

Precize reports whether a file is a clone, is sparse, and provides other useful information including full sizes and inode numbers.

Sparsity can create test sparse files of any size, and can scan for them in folders.

Mints can inspect APFS log entries to verify Trimming on mounting, as detailed here.

Stibium is my own storage performance benchmark tool that is far more flexible than others. Performing its Gold Standard test is detailed here and in its Help book.